Istio - Mode : Sidecar, Ambient

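1. What Is Istio

- The name Istio comes from the Greek word ιστίο (istio), which means "sail".

- Technically, it is best known as a service mesh: it observes, manages, and secures the communication between microservices.

- At L7 it provides traffic management, service-to-service authentication, logging, monitoring, and load balancing.

Main Istio mode: key characteristics of Sidecar

- A sidecar is originally the passenger seat attached to the side of a motorcycle. By analogy, an Istio sidecar runs alongside a microservice in a supporting role.

- An Envoy proxy runs as a sidecar next to each service and intercepts and manages all of its traffic. Service-to-service communication goes through these proxies, which makes it straightforward to apply traffic management, logging, authentication, and security policy.

- The advantage of this approach is that little application code has to change (assuming the application is already split into microservices), and network-related logic can be managed through the Envoy proxy.

- The Envoy proxy image is versioned separately, and because Envoy is also used by several other service mesh tools, it is widely adopted and well documented.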

Test environment setup

- Deploy with CloudFormation and check the status

$ aws cloudformation deploy --template-file kans-7w.yaml --stack-name mylab --parameter-overrides KeyName=aws-key-pair SgIngressSshCidr=x.x.x.x/32 --region ap-northeast-2

Waiting for changeset to be created..

Waiting for stack create/update to complete

Successfully created/updated stack - mylab

$ while true; do

date

AWS_PAGER="" aws cloudformation list-stacks \

--stack-status-filter CREATE_IN_PROGRESS CREATE_COMPLETE CREATE_FAILED DELETE_IN_PROGRESS DELETE_FAILED \

--query "StackSummaries[*].{StackName:StackName, StackStatus:StackStatus}" \

--output table

sleep 1

done

Mon Oct 14 13:38:58 KST 2024

----------------------------------

| ListStacks |

+------------+-------------------+

| StackName | StackStatus |

+------------+-------------------+

| mylab | CREATE_COMPLETE |

+------------+-------------------+
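The loop above polls forever and has to be stopped by hand. As a hedged alternative, the sketch below polls a status command until it prints the expected value or a retry limit is reached. `wait_for_status` and `stack_status` are hypothetical helper names, not part of the AWS CLI, and the retry count and delay in the usage line are arbitrary assumptions. The AWS CLI also ships a built-in waiter, `aws cloudformation wait stack-create-complete --stack-name mylab`, which may be all that is needed.

```shell
# Poll a command until it prints the expected value, or give up after N tries.
# Arguments: expected value, max attempts, delay in seconds, then the command.
wait_for_status() {
  local expected="$1" attempts="$2" delay="$3"
  shift 3
  local i=1 status=""
  while [ "$i" -le "$attempts" ]; do
    status="$("$@" 2>/dev/null)"
    if [ "$status" = "$expected" ]; then
      echo "reached $expected after $i attempt(s)"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "gave up waiting for $expected (last status: ${status:-none})" >&2
  return 1
}

# Print the status of one CloudFormation stack (requires the AWS CLI).
stack_status() {
  AWS_PAGER="" aws cloudformation describe-stacks --stack-name "$1" \
    --query 'Stacks[0].StackStatus' --output text
}

# Usage (not run here): wait_for_status CREATE_COMPLETE 120 5 stack_status mylab
```

Because the status command is passed in as an argument, the same helper can wait on any command that prints a single status word.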

$ aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output text

other-server-1 None running

other-server-2 <public IP> running

other-server-3 None running

k3s-s 52.79.67.234 running

k3s-w2 3.36.109.52 running

k3s-w1 3.35.166.8 running

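testpc 3.38.192.246 running

2. What Is Envoy

- Envoy is the communication path for applications managed by Istio: every service inside the mesh talks to the others through the Envoy proxy that runs as its sidecar.

- As a result, traffic limiting and monitoring are also done by inspecting and managing the Envoy container.

- Envoy is a core technology not only for Istio but for many other service mesh products as well.

Before installing Istio, let's install Envoy on its own and run a quick functional test.

- Install Envoy on testpc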

$ wget -O- https://apt.envoyproxy.io/signing.key | sudo gpg --dearmor -o /etc/apt/keyrings/envoy-keyring.gpg

$ echo "deb [signed-by=/etc/apt/keyrings/envoy-keyring.gpg] https://apt.envoyproxy.io focal main" | sudo tee /etc/apt/sources.list.d/envoy.list

$ sudo apt-get update && sudo apt-get install envoy -y

# Verify

$ envoy --version

envoy version: cc4a75482810de4b84c301d13deb551bd3147339/1.31.2/Clean/RELEASE/BoringSSL

# Help

$ envoy --help

USAGE:

envoy [--stats-tag <string>] ... [--enable-core-dump] [--socket-mode

<string>] [--socket-path <string>] [--disable-extensions

<string>] [--cpuset-threads] [--enable-mutex-tracing]

[--disable-hot-restart] [--mode <string>]

[--parent-shutdown-time-s <uint32_t>] [--drain-strategy <string>]

[--drain-time-s <uint32_t>] [--file-flush-interval-msec

<uint32_t>] [--service-zone <string>] [--service-node <string>]

[--service-cluster <string>] [--hot-restart-version]

[--restart-epoch <uint32_t>] [--log-path <string>]

[--enable-fine-grain-logging] [--log-format-escaped]

[--log-format <string>] [--component-log-level <string>] [-l

<string>] [--local-address-ip-version <string>]

[--admin-address-path <string>] [--ignore-unknown-dynamic-fields]

[--reject-unknown-dynamic-fields] [--allow-unknown-static-fields]

[--allow-unknown-fields] [--config-yaml <string>] [-c <string>]

[--concurrency <uint32_t>] [--base-id-path <string>]

[--skip-hot-restart-parent-stats]

[--skip-hot-restart-on-no-parent] [--use-dynamic-base-id]

[--base-id <uint32_t>] [--] [--version] [-h]

- Envoy proxy hands-on

# Run the demo configuration

$ curl -O https://www.envoyproxy.io/docs/envoy/latest/_downloads/92dcb9714fb6bc288d042029b34c0de4/envoy-demo.yaml

$ envoy -c envoy-demo.yaml

...

[2024-10-14 14:27:02.501][3325][info][upstream] [source/common/upstream/cluster_manager_impl.cc:249] cm init: all clusters initialized

[2024-10-14 14:27:02.501][3325][info][main] [source/server/server.cc:958] all clusters initialized. initializing init manager

[2024-10-14 14:27:02.501][3325][info][config] [source/common/listener_manager/listener_manager_impl.cc:930] all dependencies initialized. starting workers

# Check listening sockets

$ ss -tnltp | grep -v 22

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 4096 127.0.0.53%lo:53 0.0.0.0:* users:(("systemd-resolve",pid=346,fd=14))

LISTEN 0 4096 0.0.0.0:10000 0.0.0.0:* users:(("envoy",pid=3325,fd=25))

LISTEN 0 4096 0.0.0.0:10000 0.0.0.0:* users:(("envoy",pid=3325,fd=24))

LISTEN 0 511 *:80 *:* users:(("apache2",pid=2389,fd=4),("apache2",pid=2388,fd=4),("apache2",pid=2386,fd=4))

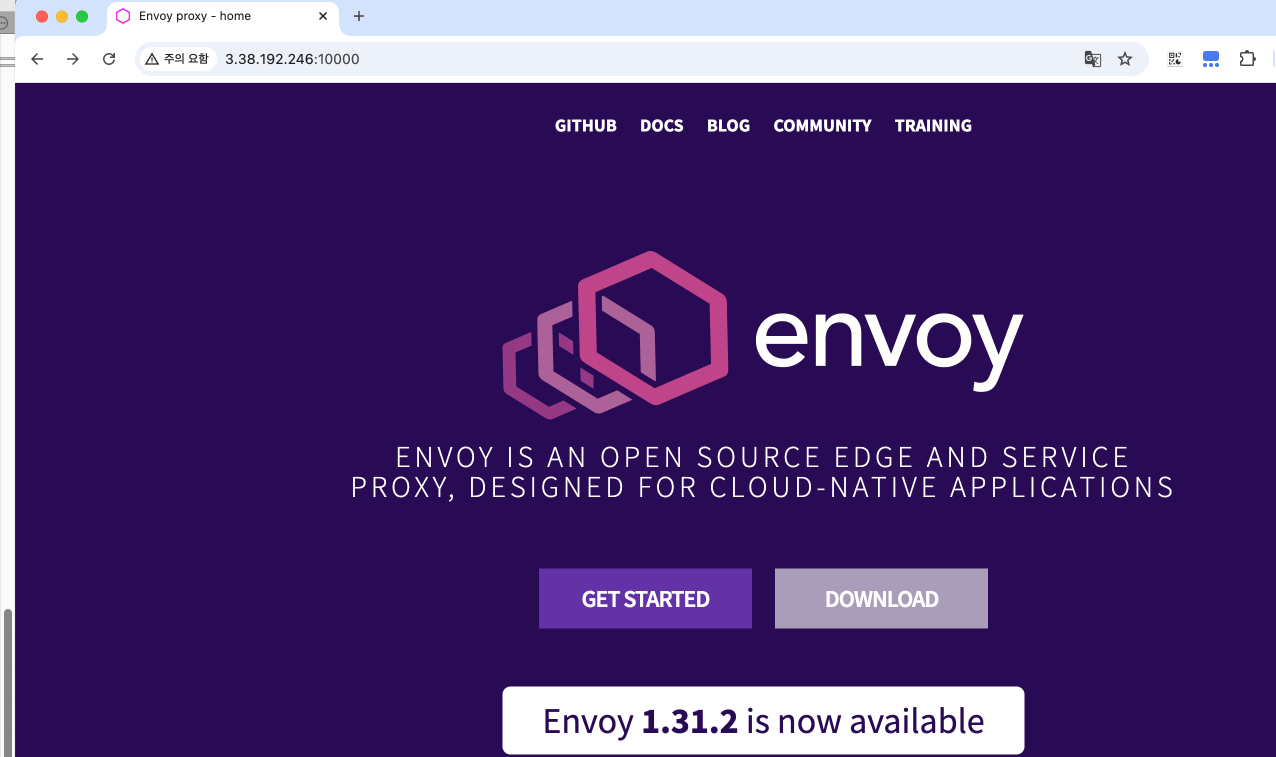

# Connection test

$ curl -s http://127.0.0.1:10000 | grep -o "<title>.*</title>"

<title>Envoy proxy - home</title>

# The request is recorded in the Envoy log

[2024-10-14T05:29:16.594Z] "GET / HTTP/1.1" 200 - 0 15596 371 296 "-" "curl/7.81.0" "d1f8f867-df28-4c1c-bfe7-455389f13521" "www.envoyproxy.io" "52.74.166.77:443"
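The log line above appears to follow Envoy's default access-log text format, where the unquoted numbers after the request are the response code, response flags, bytes received, bytes sent, total duration in milliseconds, and upstream service time. A minimal sketch for pulling out the most useful fields is shown below. `summarize_access_log` is a hypothetical helper name, and it assumes no quoted field contains an embedded double quote.

```shell
# Summarize one Envoy access-log line in the default text format.
# Splitting on double quotes isolates the quoted fields; the unquoted
# run between them holds status, flags, byte counts and timings.
summarize_access_log() {
  awk -F'"' '{
    n = split($3, f, " ")   # status flags bytes_rx bytes_tx duration upstream_time
    if (n < 6) next         # skip lines that do not match the expected layout
    printf "request=%s status=%s bytes_sent=%s duration_ms=%s authority=%s upstream=%s\n",
           $2, f[1], f[4], f[5], $10, $12
  }'
}

# Usage (not run here): envoy -c envoy-demo.yaml | summarize_access_log
```

For the line shown above, this reports status 200, 15596 bytes sent, a 371 ms duration, and `52.74.166.77:443` as the upstream host.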

# Check external access

$ echo -e "http://$(curl -s ipinfo.io/ip):10000"

http://3.38.192.246:10000

# Connection test from k3s-s

$ curl -s http://192.168.10.200:10000 | grep -o "<title>.*</title>"

$ ss -tnp

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

ESTAB 0 0 192.168.10.200:10000 220.118.41.211:57031 users:(("envoy",pid=3325,fd=37))

ESTAB 0 0 192.168.10.200:10000 220.118.41.211:57034 users:(("envoy",pid=3325,fd=40))

ESTAB 0 0 192.168.10.200:10000 220.118.41.211:57035 users:(("envoy",pid=3325,fd=43))

ESTAB 0 0 192.168.10.200:10000 220.118.41.211:57028 users:(("envoy",pid=3325,fd=22))

ESTAB 0 0 192.168.10.200:10000 220.118.41.211:57029 users:(("envoy",pid=3325,fd=34))

ESTAB 0 0 192.168.10.200:10000 220.118.41.211:57033 users:(("envoy",pid=3325,fd=39))

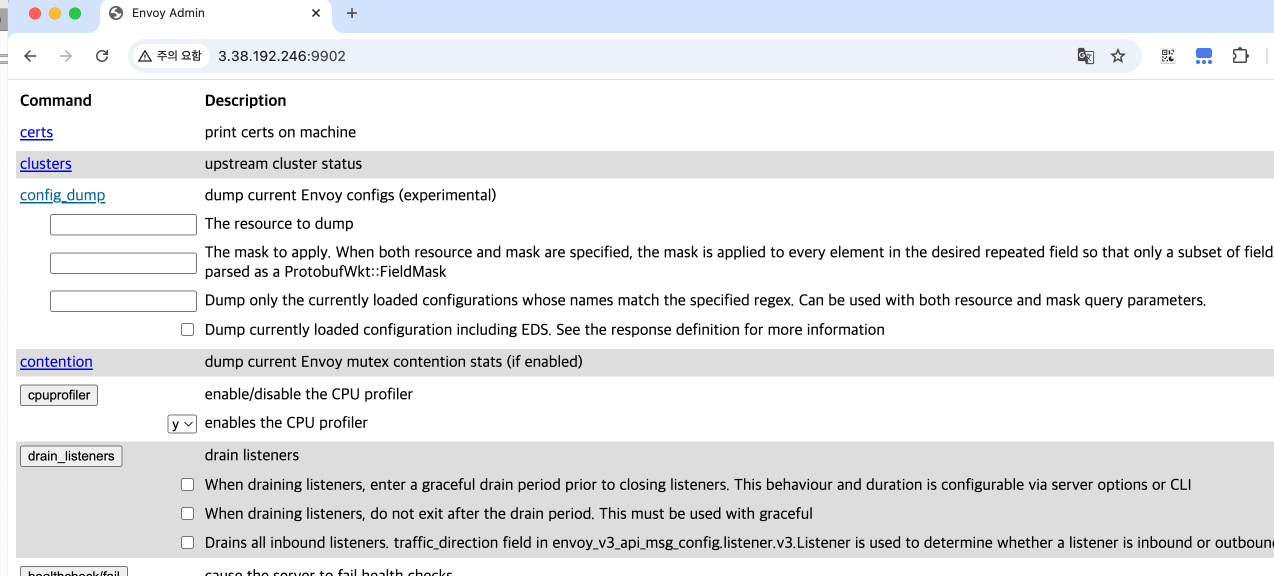

# 관리자페이지 적용 후 재배포

$ cat <<EOT> envoy-override.yaml

**admin**:

address:

socket_address:

address: **0.0.0.0**

port_value: **9902**

EOT

envoy -c envoy-demo.yaml --config-yaml "$(cat envoy-override.yaml)"

#다시 접근 테스트

$ echo -e "http://$(curl -s ipinfo.io/ip):9902"

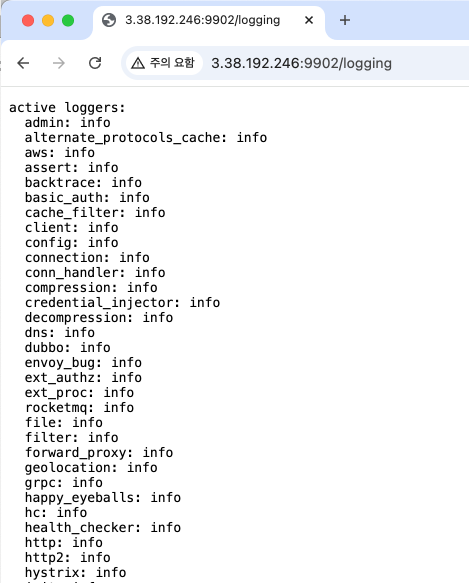

http://3.38.192.246:9902- 로컬에서 10000 port로 접근한 결과

- 로컬에서 관리자 페이지(9902)로 접근한 결과

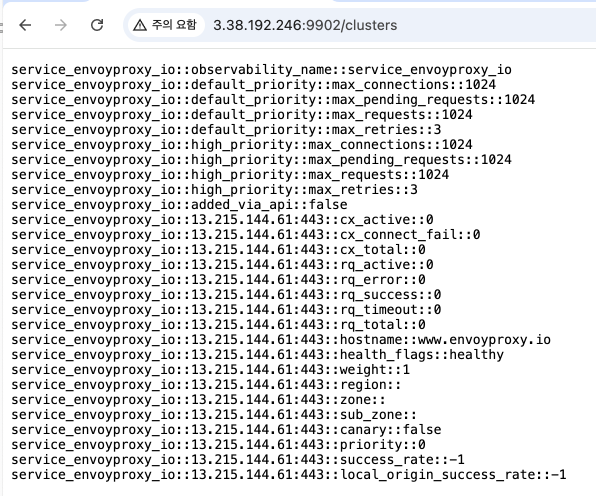

- Cluster 페이지 접근

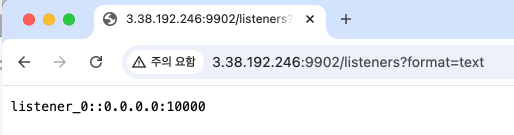

- listernes 페이지 접근

- logging 페이지 접근

- envoy 테스트 결과

- envoy를 간단하게 ec2에서 띄어보고 페이지를 로컬 또는 다른 ec2에서 접근해봤습니다.

- 결과적으로는 외부 접근, 내부 접근이 열려있는 상황이라 큰 차이는 없는 것을 확인할 수 있습니다.

- 추가로 envoy에서는 다양한 관리페이지를 지원하며 해당 페이지별로 logging 설정은 어떤식으로 되어 있는지, envoy 서비스의 global 설정은 어떠하게 정의되어 있는지 확인 할 수 있었습니다.
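The admin pages can also be queried from the command line. The sketch below wraps `curl` for the admin interface on port 9902 configured in envoy-override.yaml. `envoy_admin` and the `ENVOY_ADMIN` variable are hypothetical names chosen for this example, and the paths in the usage comments are the admin endpoints as I understand them, so check them against the Envoy version in use. Binding the admin interface to 0.0.0.0 exposes it to anyone who can reach the host, so this is only reasonable in a lab.

```shell
# Base URL of the Envoy admin interface; 9902 matches envoy-override.yaml.
ENVOY_ADMIN="${ENVOY_ADMIN:-http://127.0.0.1:9902}"

# Send one request to the admin interface. $1 = HTTP method, $2 = path.
envoy_admin() {
  curl -s -X "$1" "${ENVOY_ADMIN}$2"
}

# Usage (not run here):
#   envoy_admin GET  /listeners      # listener addresses
#   envoy_admin GET  /clusters       # upstream clusters and their hosts
#   envoy_admin GET  /server_info    # version and command-line options
#   envoy_admin POST /logging        # current log level of each logger
```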

3. Installing Istio

- Istio CLI and deployment to Kubernetes

- istioctl supports many operations, so it is worth getting familiar with the CLI before deploying and managing Istio.

# Install istioctl

$ export ISTIOV=1.23.2

$ echo "export ISTIOV=1.23.2" >> /etc/profile

$ curl -s -L https://istio.io/downloadIstio | ISTIO_VERSION=$ISTIOV TARGET_ARCH=x86_64 sh -

Downloading istio-1.23.2 from https://github.com/istio/istio/releases/download/1.23.2/istio-1.23.2-linux-amd64.tar.gz ...

Istio 1.23.2 Download Complete!

Istio has been successfully downloaded into the istio-1.23.2 folder on your system.

Next Steps:

See https://istio.io/latest/docs/setup/install/ to add Istio to your Kubernetes cluster.

To configure the istioctl client tool for your workstation,

add the /root/istio-1.23.2/bin directory to your environment path variable with:

export PATH="$PATH:/root/istio-1.23.2/bin"

Begin the Istio pre-installation check by running:

istioctl x precheck

Need more information? Visit https://istio.io/latest/docs/setup/install/

$ tree istio-$ISTIOV -L 2 # includes sample YAML

istio-1.23.2

├── LICENSE

├── README.md

├── bin

│ └── istioctl

├── manifest.yaml

├── manifests

│ ├── charts

│ └── profiles

├── samples

│ ├── README.md

│ ├── addons

│ ├── ambient-argo

│ ├── bookinfo

│ ├── builder

│ ├── certs

│ ├── cicd

│ ├── custom-bootstrap

│ ├── extauthz

│ ├── external

│ ├── grpc-echo

│ ├── health-check

│ ├── helloworld

│ ├── httpbin

│ ├── jwt-server

│ ├── kind-lb

│ ├── multicluster

│ ├── open-telemetry

│ ├── operator

│ ├── ratelimit

│ ├── security

│ ├── sleep

│ ├── tcp-echo

│ ├── wasm_modules

│ └── websockets

└── tools

├── _istioctl

├── certs

└── istioctl.bash

31 directories, 7 files

$ cp istio-$ISTIOV/bin/istioctl /usr/local/bin/istioctl

$ istioctl version --remote=false

client version: 1.23.2

# Deploy the control plane (default profile) - [Link](https://istio.io/latest/docs/setup/additional-setup/config-profiles/) [Customizing](https://istio.io/latest/docs/setup/additional-setup/customize-installation/)

# The istioctl command supports the full IstioOperator API via command-line options for individual settings or for passing a yaml file containing an IstioOperator custom resource (CR).

$ istioctl profile list

Istio configuration profiles:

ambient

default

demo

empty

minimal

openshift

openshift-ambient

preview

remote

stable

$ istioctl profile dump default

apiVersion: install.istio.io/v1alpha1

kind: IstioOperator

spec:

components:

base:

enabled: true

egressGateways:

- enabled: false

name: istio-egressgateway

ingressGateways:

- enabled: true

name: istio-ingressgateway

pilot:

enabled: true

hub: docker.io/istio

profile: default

tag: 1.23.2

values:

defaultRevision: ""

gateways:

istio-egressgateway: {}

istio-ingressgateway: {}

global:

configValidation: true

istioNamespace: istio-system

$ istioctl profile dump --config-path components.ingressGateways

.ingressGateways

- enabled: true

name: istio-ingressgateway

$ istioctl profile dump --config-path values.gateways.istio-ingressgateway

{}

$ istioctl profile dump demo

apiVersion: install.istio.io/v1alpha1

kind: IstioOperator

spec:

components:

base:

enabled: true

egressGateways:

- enabled: true

name: istio-egressgateway

ingressGateways:

- enabled: true

name: istio-ingressgateway

pilot:

enabled: true

hub: docker.io/istio

profile: demo

tag: 1.23.2

values:

defaultRevision: ""

gateways:

istio-egressgateway: {}

istio-ingressgateway: {}

global:

configValidation: true

istioNamespace: istio-system

profile: demo

$ istioctl profile dump demo > demo-profile.yaml

$ vi demo-profile.yaml # adjusted for the lab scenario to reduce complexity

--------------------

    egressGateways:

    - enabled: false

--------------------

$ istioctl install -f demo-profile.yaml

|\

| \

| \

| \

/|| \

/ || \

/ || \

/ || \

/ || \

/ || \

/______||__________\

____________________

\__ _____/

\_____/

This will install the Istio 1.23.2 "demo" profile (with components: Istio core, Istiod, and Ingress gateways) into the cluster. Proceed? (y/N) y

✔ Istio core installed ⛵️

✔ Istiod installed 🧠

✔ Ingress gateways installed 🛬

✔ Installation complete

Made this installation the default for cluster-wide operations.

# Verify the installation: istiod, istio-ingressgateway

$ kubectl get all,svc,ep,sa,cm,secret -n istio-system

NAME READY STATUS RESTARTS AGE

pod/istio-ingressgateway-5f9f654d46-mc6xq 1/1 Running 0 45s

pod/istiod-7f8b586864-xmj9z 1/1 Running 0 61s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/istio-ingressgateway LoadBalancer 10.10.200.158 192.168.10.10,192.168.10.101,192.168.10.102 15021:32608/TCP,80:31355/TCP,443:31041/TCP,31400:30436/TCP,15443:32650/TCP 45s

service/istiod ClusterIP 10.10.200.74 <none> 15010/TCP,15012/TCP,443/TCP,15014/TCP 61s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/istio-ingressgateway 1/1 1 1 45s

deployment.apps/istiod 1/1 1 1 61s

NAME DESIRED CURRENT READY AGE

replicaset.apps/istio-ingressgateway-5f9f654d46 1 1 1 45s

replicaset.apps/istiod-7f8b586864 1 1 1 61s

NAME ENDPOINTS AGE

endpoints/istio-ingressgateway 172.16.2.4:15443,172.16.2.4:15021,172.16.2.4:31400 + 2 more... 46s

endpoints/istiod 172.16.2.2:15012,172.16.2.2:15010,172.16.2.2:15017 + 1 more... 62s

NAME SECRETS AGE

serviceaccount/default 0 64s

serviceaccount/istio-ingressgateway-service-account 0 46s

serviceaccount/istio-reader-service-account 0 63s

serviceaccount/istiod 0 62s

NAME DATA AGE

configmap/istio 2 62s

configmap/istio-ca-root-cert 1 48s

configmap/istio-gateway-status-leader 0 49s

configmap/istio-leader 0 49s

configmap/istio-namespace-controller-election 0 48s

configmap/istio-sidecar-injector 2 62s

configmap/kube-root-ca.crt 1 64s

NAME TYPE DATA AGE

secret/istio-ca-secret istio.io/ca-root 5 49s

$ kubectl get crd | grep istio.io | sort

authorizationpolicies.security.istio.io 2024-10-14T06:04:13Z

destinationrules.networking.istio.io 2024-10-14T06:04:13Z

envoyfilters.networking.istio.io 2024-10-14T06:04:13Z

gateways.networking.istio.io 2024-10-14T06:04:13Z

peerauthentications.security.istio.io 2024-10-14T06:04:13Z

proxyconfigs.networking.istio.io 2024-10-14T06:04:13Z

requestauthentications.security.istio.io 2024-10-14T06:04:13Z

serviceentries.networking.istio.io 2024-10-14T06:04:13Z

sidecars.networking.istio.io 2024-10-14T06:04:14Z

telemetries.telemetry.istio.io 2024-10-14T06:04:14Z

virtualservices.networking.istio.io 2024-10-14T06:04:14Z

wasmplugins.extensions.istio.io 2024-10-14T06:04:14Z

workloadentries.networking.istio.io 2024-10-14T06:04:14Z

workloadgroups.networking.istio.io 2024-10-14T06:04:14Z

# Check the Envoy version of istio-ingressgateway

$ kubectl exec -it deploy/istio-ingressgateway -n istio-system -c istio-proxy -- envoy --version

envoy version: 6c72b2179f5a58988b920a55b0be8346de3f7b35/1.31.2-dev/Clean/RELEASE/BoringSSL

# Change the istio-ingressgateway Service to NodePort

$ kubectl patch svc -n istio-system istio-ingressgateway -p '{"spec":{"type":"NodePort"}}'

service/istio-ingressgateway patched

# Check the istio-ingressgateway Service

$ kubectl get svc,ep -n istio-system istio-ingressgateway

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/istio-ingressgateway NodePort 10.10.200.158 <none> 15021:32608/TCP,80:31355/TCP,443:31041/TCP,31400:30436/TCP,15443:32650/TCP 102s

NAME ENDPOINTS AGE

endpoints/istio-ingressgateway 172.16.2.4:15443,172.16.2.4:15021,172.16.2.4:31400 + 2 more... 102s

## Check the istio-ingressgateway Service ports

$ kubectl get svc -n istio-system istio-ingressgateway -o jsonpath='{.spec.ports[*]}' | jq

{

"name": "status-port",

"nodePort": 32608,

"port": 15021,

"protocol": "TCP",

"targetPort": 15021

}

{

"name": "http2",

"nodePort": 31355,

"port": 80,

"protocol": "TCP",

"targetPort": 8080

}

{

"name": "https",

"nodePort": 31041,

"port": 443,

"protocol": "TCP",

"targetPort": 8443

}

{

"name": "tcp",

"nodePort": 30436,

"port": 31400,

"protocol": "TCP",

"targetPort": 31400

}

{

"name": "tls",

"nodePort": 32650,

"port": 15443,

"protocol": "TCP",

"targetPort": 15443

}

## Check the ports of the istio-ingressgateway Deployment pod

$ kubectl get deploy/istio-ingressgateway -n istio-system -o jsonpath='{.spec.template.spec.containers[0].ports[*]}' | jq

{

"containerPort": 15021,

"protocol": "TCP"

}

{

"containerPort": 8080,

"protocol": "TCP"

}

{

"containerPort": 8443,

"protocol": "TCP"

}

{

"containerPort": 31400,

"protocol": "TCP"

}

{

"containerPort": 15443,

"protocol": "TCP"

}

{

"containerPort": 15090,

"name": "http-envoy-prom",

"protocol": "TCP"

}

$ kubectl get deploy/istio-ingressgateway -n istio-system -o jsonpath='{.spec.template.spec.containers[0].readinessProbe}' | jq

{

"failureThreshold": 30,

"httpGet": {

"path": "/healthz/ready",

"port": 15021,

"scheme": "HTTP"

},

"initialDelaySeconds": 1,

"periodSeconds": 2,

"successThreshold": 1,

"timeoutSeconds": 1

}

# Check the istiod deployment

$ kubectl exec -it deployment.apps/istiod -n istio-system -- ss -tnlp

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 4096 127.0.0.1:9876 0.0.0.0:* users:(("pilot-discovery",pid=1,fd=8))

LISTEN 0 4096 *:8080 *:* users:(("pilot-discovery",pid=1,fd=3))

LISTEN 0 4096 *:15010 *:* users:(("pilot-discovery",pid=1,fd=11))

LISTEN 0 4096 *:15012 *:* users:(("pilot-discovery",pid=1,fd=10))

LISTEN 0 4096 *:15014 *:* users:(("pilot-discovery",pid=1,fd=9))

LISTEN 0 4096 *:15017 *:* users:(("pilot-discovery",pid=1,fd=12))

$ kubectl exec -it deployment.apps/istiod -n istio-system -- ss -tnp

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

ESTAB 0 0 172.16.2.2:56766 10.10.200.1:443 users:(("pilot-discovery",pid=1,fd=7))

ESTAB 0 0 [::ffff:172.16.2.2]:15012 [::ffff:172.16.2.4]:40532 users:(("pilot-discovery",pid=1,fd=14))

ESTAB 0 0 [::ffff:172.16.2.2]:15012 [::ffff:172.16.2.4]:40534 users:(("pilot-discovery",pid=1,fd=15))

$ kubectl exec -it deployment.apps/istiod -n istio-system -- ps -ef

UID PID PPID C STIME TTY TIME CMD

istio-p+ 1 0 0 05:27 ? 00:00:07 /usr/local/bin/pilot-discovery discovery --monitoringAddr=:15014 --log_output_l

# Check the istio-ingressgateway deployment

$ kubectl exec -it deployment.apps/istio-ingressgateway -n istio-system -- ss -tnlp

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 4096 127.0.0.1:15004 0.0.0.0:* users:(("pilot-agent",pid=1,fd=12))

LISTEN 0 4096 127.0.0.1:15000 0.0.0.0:* users:(("envoy",pid=15,fd=18))

LISTEN 0 4096 0.0.0.0:15021 0.0.0.0:* users:(("envoy",pid=15,fd=23))

LISTEN 0 4096 0.0.0.0:15021 0.0.0.0:* users:(("envoy",pid=15,fd=22))

LISTEN 0 4096 0.0.0.0:15090 0.0.0.0:* users:(("envoy",pid=15,fd=21))

LISTEN 0 4096 0.0.0.0:15090 0.0.0.0:* users:(("envoy",pid=15,fd=20))

LISTEN 0 4096 *:15020 *:* users:(("pilot-agent",pid=1,fd=3))

$ kubectl exec -it deployment.apps/istio-ingressgateway -n istio-system -- ss -tnp

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

ESTAB 0 0 127.0.0.1:59484 127.0.0.1:15020 users:(("envoy",pid=15,fd=36))

ESTAB 0 0 172.16.2.4:40532 10.10.200.74:15012 users:(("pilot-agent",pid=1,fd=9))

ESTAB 0 0 127.0.0.1:54094 127.0.0.1:15020 users:(("envoy",pid=15,fd=34))

ESTAB 0 0 172.16.2.4:40534 10.10.200.74:15012 users:(("pilot-agent",pid=1,fd=14))

ESTAB 0 0 [::ffff:127.0.0.1]:15020 [::ffff:127.0.0.1]:59484 users:(("pilot-agent",pid=1,fd=18))

ESTAB 0 0 [::ffff:127.0.0.1]:15020 [::ffff:127.0.0.1]:54094 users:(("pilot-agent",pid=1,fd=16))

$ kubectl exec -it deployment.apps/istio-ingressgateway -n istio-system -- ps -ef

istio-p+ 1 0 0 05:27 ? 00:00:01 /usr/local/bin/pilot-agent proxy router --domain istio-system.svc.cluster.local

istio-p+ 15 1 0 05:27 ? 00:00:11 /usr/local/bin/envoy -c etc/istio/proxy/envoy-rev.json --drain-time-s 45 --drai

$ kubectl exec -it deployment.apps/istio-ingressgateway -n istio-system -- cat /etc/istio/proxy/envoy-rev.json

{

"application_log_config": {

"log_format": {

"text_format": "%Y-%m-%dT%T.%fZ\t%l\tenvoy %n %g:%#\t%v\tthread=%t"

}

},

"node": {

"id": "router~172.16.2.4~istio-ingressgateway-5f9f654d46-mc6xq.istio-system~istio-system.svc.cluster.local",

"cluster": "istio-ingressgateway.istio-system",

"locality": {

},

"metadata": {"ANNOTATIONS":{"istio.io/rev":"default","kubernetes.io/config.seen":"2024-10-14T15:04:31.773107672+09:00","kubernetes.io/config.source":"api","prometheus.io/path":"/stats/prometheus","prometheus.io/port":"15020","prometheus.io/scrape":"true","sidecar.istio.io/inject":"false"},"CLUSTER_ID":"Kubernetes","ENVOY_PROMETHEUS_PORT":15090,"ENVOY_STATUS_PORT":15021,"INSTANCE_IPS":"172.16.2.4","ISTIO_PROXY_SHA":"6c72b2179f5a58988b920a55b0be8346de3f7b35","ISTIO_VERSION":"1.23.2","LABELS":{"app":"istio-ingressgateway","chart":"gateways","heritage":"Tiller","install.operator.istio.io/owning-resource":"unknown","istio":"ingressgateway","istio.io/rev":"default","operator.istio.io/component":"IngressGateways","release":"istio","service.istio.io/canonical-name":"istio-ingressgateway","service.istio.io/canonical-revision":"latest","sidecar.istio.io/inject":"false"},"MESH_ID":"cluster.local","NAME":"istio-ingressgateway-5f9f654d46-mc6xq","NAMESPACE":"istio-system","NODE_NAME":"k3s-w1","OWNER":"kubernetes://apis/apps/v1/namespaces/istio-system/deployments/istio-ingressgateway","PILOT_SAN":["istiod.istio-system.svc"],"PROXY_CONFIG":{"binaryPath":"/usr/local/bin/envoy","concurrency":2,"configPath":"./etc/istio/proxy","controlPlaneAuthPolicy":"MUTUAL_TLS","discoveryAddress":"istiod.istio-system.svc:15012","drainDuration":"45s","proxyAdminPort":15000,"serviceCluster":"istio-proxy","statNameLength":189,"statusPort":15020,"terminationDrainDuration":"5s"},"SERVICE_ACCOUNT":"istio-ingressgateway-service-account","UNPRIVILEGED_POD":"true","WORKLOAD_NAME":"istio-ingressgateway"}

},

...

$ kubectl exec -it deployment.apps/istio-ingressgateway -n istio-system -- ss -xnlp

Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

u_str LISTEN 0 4096 var/run/secrets/workload-spiffe-uds/socket 42272 * 0 users:(("pilot-agent",pid=1,fd=8))

u_str LISTEN 0 4096 etc/istio/proxy/XDS 42273 * 0 users:(("pilot-agent",pid=1,fd=10))

$ kubectl exec -it deployment.apps/istio-ingressgateway -n istio-system -- ss -xnp

Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

u_str ESTAB 0 0 * 40546 * 42280 users:(("envoy",pid=15,fd=19))

u_str ESTAB 0 0 etc/istio/proxy/XDS 42280 * 40546 users:(("pilot-agent",pid=1,fd=11))

u_str ESTAB 0 0 var/run/secrets/workload-spiffe-uds/socket 41289 * 40569 users:(("pilot-agent",pid=1,fd=15))

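u_str ESTAB 0 0 * 40569 * 41289 users:(("envoy",pid=15,fd=32))

- As shown above, istioctl can dump each profile as a YAML file, which makes it easier to build and reference a test environment.

- Once the installation finishes, the Pods, Services, Endpoints, and also the CRDs are deployed together, much like a Helm chart for a group of services. All we need to do is understand the workflow and use it.

- In addition, a namespace can be configured so that every application started in it gets an Envoy sidecar injected automatically.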

$ kubectl label namespace default istio-injection=enabled

namespace/default labeled

$ kubectl get ns -L istio-injection

NAME STATUS AGE ISTIO-INJECTION

default Active 98m enabled

istio-system Active 10m

kube-node-lease Active 98m

kube-public Active 98m

kube-system Active 98m

- Istio connection test

# Store the istio ingress gateway NodePort (for HTTP access) in a variable

export IGWHTTP=$(kubectl get service -n istio-system istio-ingressgateway -o jsonpath='{.spec.ports[1].nodePort}')

echo $IGWHTTP

IGWHTTP=31355
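The command above picks the HTTP port by position (`.spec.ports[1]`), which silently returns a different port if the order of the Service ports ever changes. A hedged alternative is to select the port by name with a JSONPath filter. `igw_nodeport` is a hypothetical helper name, and the port name `http2` is taken from the Service port listing shown earlier.

```shell
# Print the NodePort of one named port on the istio-ingressgateway Service.
# $1 = port name as listed in .spec.ports (for example http2 or https).
igw_nodeport() {
  kubectl get service -n istio-system istio-ingressgateway \
    -o jsonpath="{.spec.ports[?(@.name==\"$1\")].nodePort}"
}

# Usage (not run here):
#   export IGWHTTP=$(igw_nodeport http2)
```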

## Check the dynamic public IP of the node where the istio-ingressgateway pod runs

aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output text

k3s-s 3.38.151.222 running

k3s-w1 15.165.75.117 running

k3s-w2 3.39.223.99 running

testpc 54.180.243.135 running

# Edit /etc/hosts

MYDOMAIN=www.naver.com

export MYDOMAIN=www.naver.com

echo -e "192.168.10.10 $MYDOMAIN" >> /etc/hosts

echo -e "export MYDOMAIN=$MYDOMAIN" >> /etc/profile

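# Test access through the istio ingress gateway: this fails for now because nothing is configured yet

curl -v -s $MYDOMAIN:$IGWHTTP

4. Exposing Services Externally with Istio

- Istio is a service mesh solution that covers application traffic from L7 routing down to L3 and L4.

- There are several ways to reach services inside the mesh from outside.

- Deploy Nginx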

$ kubectl get pod -n istio-system -l app=istiod

NAME READY STATUS RESTARTS AGE

istiod-7f8b586864-xmj9z 1/1 Running 0 21m

$ kubetail -n istio-system -l app=istiod -f

istiod-7f8b586864-xmj9z

$ kubetail -n istio-system -l app=istio-ingressgateway -f

istio-ingressgateway-5f9f654d46-mc6xq

$ cat <<EOF | kubectl create -f -

apiVersion: apps/v1

kind: Deployment

metadata:

  name: deploy-websrv

spec:

  replicas: 1

  selector:

    matchLabels:

      app: deploy-websrv

  template:

    metadata:

      labels:

        app: deploy-websrv

    spec:

      terminationGracePeriodSeconds: 0

      containers:

      - name: deploy-websrv

        image: nginx:alpine

        ports:

        - containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

  name: svc-clusterip

spec:

  ports:

    - name: svc-webport

      port: 80

      targetPort: 80

  selector:

    app: deploy-websrv

  type: ClusterIP

EOF

deployment.apps/deploy-websrv created

service/svc-clusterip created

$ kubectl get pod,svc,ep -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/deploy-websrv-7d7cf8586c-crbb7 2/2 Running 0 44s 172.16.2.5 k3s-w1 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.10.200.1 <none> 443/TCP 110m <none>

service/svc-clusterip ClusterIP 10.10.200.88 <none> 80/TCP 44s app=deploy-websrv

NAME ENDPOINTS AGE

endpoints/kubernetes 192.168.10.10:6443 110m

endpoints/svc-clusterip 172.16.2.5:80 44s

$ kc describe pod

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 24s default-scheduler Successfully assigned default/deploy-websrv-7d7cf8586c-crbb7 to k3s-w1

Normal Pulled 23s kubelet Container image "docker.io/istio/proxyv2:1.23.2" already present on machine

Normal Created 23s kubelet Created container istio-init

Normal Started 23s kubelet Started container istio-init

Normal Pulling 22s kubelet Pulling image "nginx:alpine"

Normal Pulled 17s kubelet Successfully pulled image "nginx:alpine" in 5.082s (5.082s including waiting). Image size: 20506631 bytes.

Normal Created 17s kubelet Created container deploy-websrv

Normal Started 17s kubelet Started container deploy-websrv

Normal Pulled 17s kubelet Container image "docker.io/istio/proxyv2:1.23.2" already present on machine

Normal Created 17s kubelet Created container istio-proxy

Normal Started 17s kubelet Started container istio-proxy

- Configure the Istio Gateway and VirtualService

$ cat <<EOF | kubectl create -f -

apiVersion: networking.istio.io/v1

kind: Gateway

metadata:

  name: test-gateway

spec:

  selector:

    istio: ingressgateway

  servers:

  - port:

      number: 80

      name: http

      protocol: HTTP

    hosts:

    - "*"

---

apiVersion: networking.istio.io/v1

kind: VirtualService

metadata:

  name: nginx-service

spec:

  hosts:

  - "www.naver.com"

  gateways:

  - test-gateway

  http:

  - route:

    - destination:

        host: svc-clusterip

        port:

          number: 80

EOF

$ kc explain gateways.networking.istio.io

GROUP: networking.istio.io

KIND: Gateway

VERSION: v1

DESCRIPTION:

FIELDS:

apiVersion <string>

APIVersion defines the versioned schema of this representation of an object.

Servers should convert recognized schemas to the latest internal value, and

may reject unrecognized values. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

kind <string>

Kind is a string value representing the REST resource this object

represents. Servers may infer this from the endpoint the client submits

requests to. Cannot be updated. In CamelCase. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

metadata <ObjectMeta>

Standard object's metadata. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

spec <Object>

Configuration affecting edge load balancer. See more details at:

https://istio.io/docs/reference/config/networking/gateway.html

status <Object>

<no description>

$ kc explain virtualservices.networking.istio.io

GROUP: networking.istio.io

KIND: VirtualService

VERSION: v1

DESCRIPTION:

FIELDS:

apiVersion <string>

APIVersion defines the versioned schema of this representation of an object.

Servers should convert recognized schemas to the latest internal value, and

may reject unrecognized values. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

kind <string>

Kind is a string value representing the REST resource this object

represents. Servers may infer this from the endpoint the client submits

requests to. Cannot be updated. In CamelCase. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

metadata <ObjectMeta>

Standard object's metadata. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

spec <Object>

Configuration affecting label/content routing, sni routing, etc. See more

details at:

https://istio.io/docs/reference/config/networking/virtual-service.html

status <Object>

<no description>

$ kubectl api-resources | grep istio

wasmplugins extensions.istio.io/v1alpha1 true WasmPlugin

destinationrules dr networking.istio.io/v1 true DestinationRule

envoyfilters networking.istio.io/v1alpha3 true EnvoyFilter

gateways gw networking.istio.io/v1 true Gateway

proxyconfigs networking.istio.io/v1beta1 true ProxyConfig

serviceentries se networking.istio.io/v1 true ServiceEntry

sidecars networking.istio.io/v1 true Sidecar

virtualservices vs networking.istio.io/v1 true VirtualService

workloadentries we networking.istio.io/v1 true WorkloadEntry

workloadgroups wg networking.istio.io/v1 true WorkloadGroup

authorizationpolicies ap security.istio.io/v1 true AuthorizationPolicy

peerauthentications pa security.istio.io/v1 true PeerAuthentication

requestauthentications ra security.istio.io/v1 true RequestAuthentication

telemetries telemetry telemetry.istio.io/v1 true Telemetry

# A VirtualService can also reference Services in other namespaces (e.g. svc-nn.<ns>)

$ kubectl get gw,vs

NAME AGE

gateway.networking.istio.io/test-gateway 3m11s

NAME GATEWAYS HOSTS AGE

virtualservice.networking.istio.io/nginx-service ["test-gateway"] ["www.naver.com"] 73s

# External access test

$ curl -s www.naver.com:$IGWHTTP | grep -o "<title>.*</title>"

<title>Welcome to nginx!</title>

$ curl -s -v www.naver.com:$IGWHTTP

* Trying 192.168.10.102:31355...

* Connected to www.naver.com (192.168.10.102) port 31355 (#0)

> GET / HTTP/1.1

> Host: www.naver.com:31355

> User-Agent: curl/7.81.0

> Accept: */*

>

* Mark bundle as not supporting multiuse

< HTTP/1.1 200 OK

< server: istio-envoy

< date: Mon, 14 Oct 2024 06:34:27 GMT

< content-type: text/html

< content-length: 615

< last-modified: Wed, 02 Oct 2024 16:07:39 GMT

< etag: "66fd6fcb-267"

< accept-ranges: bytes

< x-envoy-upstream-service-time: 1

# Check the log output

$ kubetail -n istio-system -l app=istio-ingressgateway -f

istio-ingressgateway-5f9f654d46-mc6xq

$ kubetail -l app=deploy-websrv

deploy-websrv-7d7cf8586c-crbb7 deploy-websrv

deploy-websrv-7d7cf8586c-crbb7 istio-proxy

deploy-websrv-7d7cf8586c-crbb7 istio-init

- Exposing a service through the Istio gateway only adds an entry point. The service itself behaves the same and returns the same nginx page.

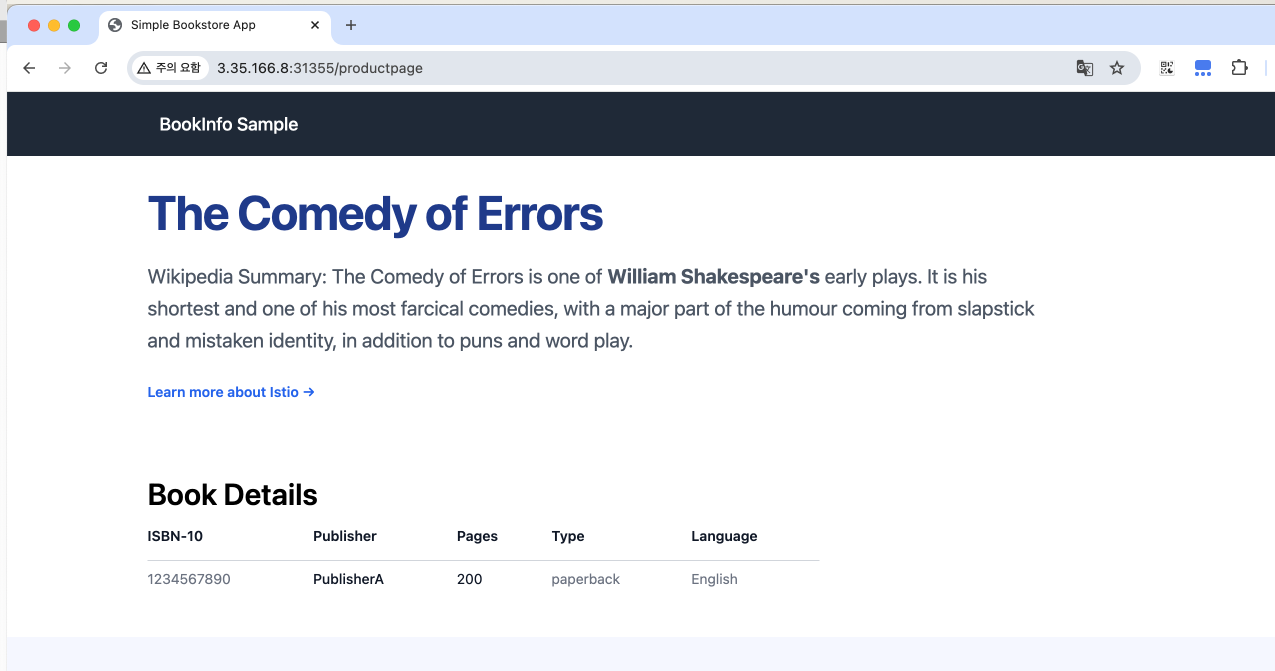

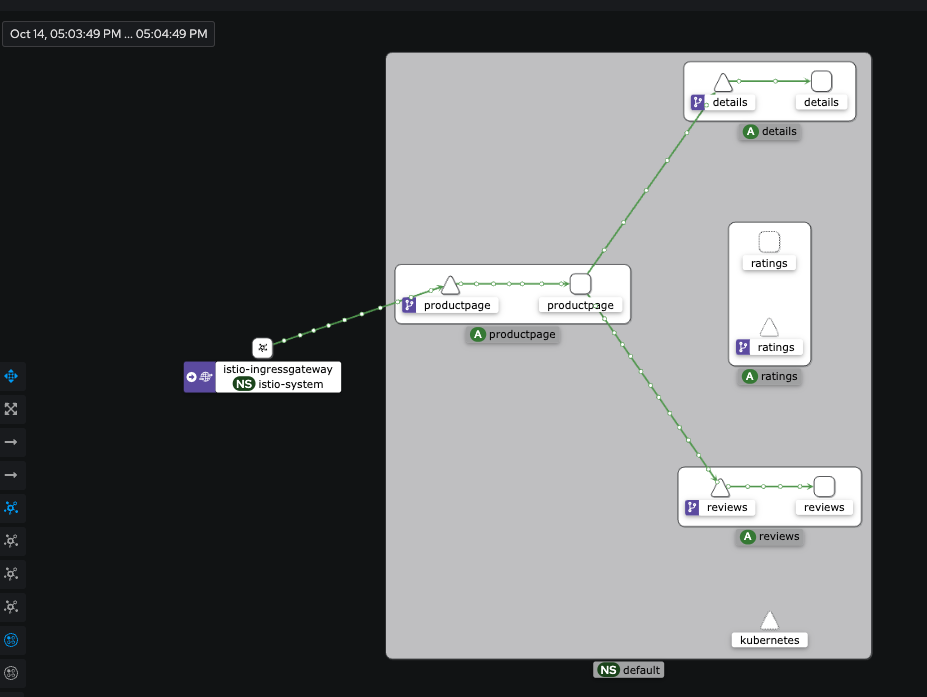
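The test above relies on mapping www.naver.com to a node IP in /etc/hosts. As a sketch of an alternative, the request can be sent straight to a node IP while presenting the host name in the `Host` header, which is what the VirtualService matches on. `curl_via_gateway` is a hypothetical helper name, and the IP and port in the usage line are the values from the transcript above.

```shell
# Call the ingress gateway by node IP while presenting the host name that
# the VirtualService matches, so /etc/hosts does not need to be edited.
# $1 = host name, $2 = node IP, $3 = NodePort, $4 = path (defaults to /).
curl_via_gateway() {
  curl -s -H "Host: $1" "http://$2:$3${4:-/}"
}

# Usage (not run here):
#   curl_via_gateway www.naver.com 192.168.10.10 "$IGWHTTP" | grep -o "<title>.*</title>"
```

This leaves name resolution on the test machine untouched, which avoids shadowing a real public domain.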

5. Istio Bookinfo Hands-on

- Bookinfo is the "Hello World" of Istio test environments. It consists of four microservices.

- productpage: the main page, with buttons that lead to the other pages.

- reviews: the book review service, which has three versions (v1, v2, v3).

- details: the service that shows detailed information about the book.

- ratings: the service that returns the ratings for the book.

- Deploy the application

# Monitoring

$ watch -d 'kubectl get pod -owide;echo;kubectl get svc'

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

details-v1-65cfcf56f9-bbhck 2/2 Running 0 19s 172.16.2.6 k3s-w1 <none> <none>

productpage-v1-d5789fdfb-xbvxm 0/2 PodInitializing 0 19s 172.16.0.5 k3s-s <none> <none>

ratings-v1-7c9bd4b87f-mzhh2 2/2 Running 0 19s 172.16.2.7 k3s-w1 <none> <none>

reviews-v1-6584ddcf65-b6fmb 0/2 PodInitializing 0 19s 172.16.1.4 k3s-w2 <none> <none>

reviews-v2-6f85cb9b7c-6r59n 0/2 PodInitializing 0 19s 172.16.1.5 k3s-w2 <none> <none>

reviews-v3-6f5b775685-5lzkv 2/2 Running 0 19s 172.16.2.8 k3s-w1 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

details ClusterIP 10.10.200.120 <none> 9080/TCP 19s

kubernetes ClusterIP 10.10.200.1 <none> 443/TCP 5m23s

productpage ClusterIP 10.10.200.127 <none> 9080/TCP 19s

ratings ClusterIP 10.10.200.152 <none> 9080/TCP 19s

reviews ClusterIP 10.10.200.80 <none> 9080/TCP 19s

# Deploy the Bookinfo application

$ echo $ISTIOV

$ cat ~/istio-$ISTIOV/samples/bookinfo/platform/kube/bookinfo.yaml

$ kubectl apply -f ~/istio-$ISTIOV/samples/bookinfo/platform/kube/bookinfo.yaml

service/details created

serviceaccount/bookinfo-details created

deployment.apps/details-v1 created

service/ratings created

serviceaccount/bookinfo-ratings created

deployment.apps/ratings-v1 created

service/reviews created

serviceaccount/bookinfo-reviews created

deployment.apps/reviews-v1 created

deployment.apps/reviews-v2 created

deployment.apps/reviews-v3 created

service/productpage created

serviceaccount/bookinfo-productpage created

deployment.apps/productpage-v1 created

# Verify

$ kubectl get all,sa

NAME READY STATUS RESTARTS AGE

pod/details-v1-65cfcf56f9-bbhck 2/2 Running 0 59s

pod/productpage-v1-d5789fdfb-xbvxm 2/2 Running 0 59s

pod/ratings-v1-7c9bd4b87f-mzhh2 2/2 Running 0 59s

pod/reviews-v1-6584ddcf65-b6fmb 2/2 Running 0 59s

pod/reviews-v2-6f85cb9b7c-6r59n 2/2 Running 0 59s

pod/reviews-v3-6f5b775685-5lzkv 2/2 Running 0 59s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/details ClusterIP 10.10.200.120 <none> 9080/TCP 59s

service/kubernetes ClusterIP 10.10.200.1 <none> 443/TCP 6m3s

service/productpage ClusterIP 10.10.200.127 <none> 9080/TCP 59s

service/ratings ClusterIP 10.10.200.152 <none> 9080/TCP 59s

service/reviews ClusterIP 10.10.200.80 <none> 9080/TCP 59s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/details-v1 1/1 1 1 59s

deployment.apps/productpage-v1 1/1 1 1 59s

deployment.apps/ratings-v1 1/1 1 1 59s

deployment.apps/reviews-v1 1/1 1 1 59s

deployment.apps/reviews-v2 1/1 1 1 59s

deployment.apps/reviews-v3 1/1 1 1 59s

NAME DESIRED CURRENT READY AGE

replicaset.apps/details-v1-65cfcf56f9 1 1 1 59s

replicaset.apps/productpage-v1-d5789fdfb 1 1 1 59s

replicaset.apps/ratings-v1-7c9bd4b87f 1 1 1 59s

replicaset.apps/reviews-v1-6584ddcf65 1 1 1 59s

replicaset.apps/reviews-v2-6f85cb9b7c 1 1 1 59s

replicaset.apps/reviews-v3-6f5b775685 1 1 1 59s

NAME SECRETS AGE

serviceaccount/bookinfo-details 0 60s

serviceaccount/bookinfo-productpage 0 60s

serviceaccount/bookinfo-ratings 0 60s

serviceaccount/bookinfo-reviews 0 60s

serviceaccount/default 0 162m

# Check web access to productpage

$ kubectl exec "$(kubectl get pod -l app=ratings -o jsonpath='{.items[0].metadata.name}')" -c ratings -- curl -sS productpage:9080/productpage | grep -o "<title>.*</title>"

<title>Simple Bookstore App</title>

# Logs

$ kubetail -l app=productpage -f

productpage-v1-d5789fdfb-xbvxm productpage

productpage-v1-d5789fdfb-xbvxm istio-proxy

productpage-v1-d5789fdfb-xbvxm istio-init

- Bookinfo ingress configuration

- Istio Gateway/VirtualService configuration

# Istio Gateway/VirtualService configuration

$ cat ~/istio-$ISTIOV/samples/bookinfo/networking/bookinfo-gateway.yaml

apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: bookinfo-gateway
spec:
  # The selector matches the ingress gateway pod labels.
  # If you installed Istio using Helm following the standard documentation, this would be "istio=ingress"
  selector:
    istio: ingressgateway # use istio default controller
  servers:
  - port:
      number: 8080
      name: http
      protocol: HTTP
    hosts:
    - "*"
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: bookinfo
spec:
  hosts:
  - "*"
  gateways:
  - bookinfo-gateway
  http:
  - match:
    - uri:
        exact: /productpage
    - uri:
        prefix: /static
    - uri:
        exact: /login
    - uri:
        exact: /logout
    - uri:
        prefix: /api/v1/products
    route:
    - destination:
        host: productpage
        port:
          number: 9080

$ kubectl apply -f ~/istio-$ISTIOV/samples/bookinfo/networking/bookinfo-gateway.yaml

# Verify

$ kubectl get gw,vs

NAME AGE

gateway.networking.istio.io/bookinfo-gateway 35s

NAME GATEWAYS HOSTS AGE

virtualservice.networking.istio.io/bookinfo ["bookinfo-gateway"] ["*"] 35s

$ istioctl proxy-status

NAME CLUSTER CDS LDS EDS RDS ECDS ISTIOD VERSION

details-v1-65cfcf56f9-bbhck.default Kubernetes SYNCED (3m40s) SYNCED (3m40s) SYNCED (3m31s) SYNCED (3m40s) IGNORED istiod-7f8b586864-xmj9z 1.23.2

istio-ingressgateway-5f9f654d46-mc6xq.istio-system Kubernetes SYNCED (47s) SYNCED (47s) SYNCED (3m31s) SYNCED (47s) IGNORED istiod-7f8b586864-xmj9z 1.23.2

productpage-v1-d5789fdfb-xbvxm.default Kubernetes SYNCED (3m31s) SYNCED (3m31s) SYNCED (3m31s) SYNCED (3m31s) IGNORED istiod-7f8b586864-xmj9z 1.23.2

ratings-v1-7c9bd4b87f-mzhh2.default Kubernetes SYNCED (3m39s) SYNCED (3m39s) SYNCED (3m31s) SYNCED (3m39s) IGNORED istiod-7f8b586864-xmj9z 1.23.2

reviews-v1-6584ddcf65-b6fmb.default Kubernetes SYNCED (3m34s) SYNCED (3m34s) SYNCED (3m31s) SYNCED (3m34s) IGNORED istiod-7f8b586864-xmj9z 1.23.2

reviews-v2-6f85cb9b7c-6r59n.default Kubernetes SYNCED (3m34s) SYNCED (3m34s) SYNCED (3m31s) SYNCED (3m34s) IGNORED istiod-7f8b586864-xmj9z 1.23.2

reviews-v3-6f5b775685-5lzkv.default Kubernetes SYNCED (3m38s) SYNCED (3m38s) SYNCED (3m31s) SYNCED (3m38s) IGNORED istiod-7f8b586864-xmj9z 1.23.2
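With more than a handful of proxies, scanning the `istioctl proxy-status` table by eye gets tedious. The following is a minimal sketch, not part of the original walkthrough, that filters the table for rows that are not fully synced. The helper name `unsynced_proxies` is hypothetical, and it assumes the `STALE` and `NOT SENT` status strings described in the Istio debugging documentation; other versions may print different values.

```shell
# Hypothetical helper: reads "istioctl proxy-status" output on stdin and
# prints the NAME column of every row that contains STALE or NOT SENT.
# Assumption: the first line is the header row, as in the output above.
unsynced_proxies() {
  awk 'NR > 1 && /STALE|NOT SENT/ { print $1 }'
}

# Usage against a live cluster (not executed here):
#   istioctl proxy-status | unsynced_proxies
```

An empty result means every listed proxy reported only SYNCED or IGNORED columns, which matches the output shown above.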

# Check the istio-proxy log of the productpage pod; the access log is printed there - Default access log format: [link](https://istio.io/latest/docs/tasks/observability/logs/access-log/#default-access-log-format)

$ kubetail -l app=productpage -c istio-proxy -f

productpage-v1-d5789fdfb-xbvxm

# Test access through the NodePort

$ export IGWHTTP=$(kubectl get service -n istio-system istio-ingressgateway -o jsonpath='{.spec.ports[1].nodePort}')

$ echo $IGWHTTP

31355

# Verify access

$ kubectl get svc -n istio-system istio-ingressgateway

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

istio-ingressgateway NodePort 10.10.200.158 <none> 15021:32608/TCP,80:31355/TCP,443:31041/TCP,31400:30436/TCP,15443:32650/TCP 78m
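The `IGWHTTP` lookup above picks the NodePort by position (`ports[1]`), which only works while port 80 stays second in the list. As a small sketch under that observation, the hypothetical helper `nodeport_for` below resolves the NodePort from the `PORT(S)` column by service port instead. The commented `kubectl` line shows the same idea with a JSONPath filter; it is an alternative I have not run against this cluster.

```shell
# Hypothetical helper: given the PORT(S) column of "kubectl get svc" and a
# service port, print the matching NodePort.
# Each entry has the form <port>:<nodePort>/<protocol>.
nodeport_for() {
  printf '%s\n' "$1" | tr ',' '\n' | awk -F'[:/]' -v p="$2" '$1 == p { print $2 }'
}

# Value copied from the service output above; prints 31355.
nodeport_for "15021:32608/TCP,80:31355/TCP,443:31041/TCP,31400:30436/TCP,15443:32650/TCP" 80

# Alternative against a live cluster (assumption, not executed here):
#   kubectl get svc -n istio-system istio-ingressgateway \
#     -o jsonpath='{.spec.ports[?(@.port==80)].nodePort}'
```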

$ curl -s http://localhost:$IGWHTTP/productpage

<meta charset="utf-8">

<meta http-equiv="X-UA-Compatible" content="IE=edge">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Simple Bookstore App</title>

<script src="static/tailwind/tailwind.css"></script>

<script type="text/javascript">

window.addEventListener("DOMContentLoaded", (event) => {

const dialog = document.querySelector("dialog");

const showButton = document.querySelector("#sign-in-button");

const closeButton = document.querySelector("#close-dialog");

if (showButton) {

showButton.addEventListener("click", () => {

dialog.showModal();

});

}

if (closeButton) {

closeButton.addEventListener("click", () => {

dialog.close();

});

}

})

</script>

...

# Verify access using the hostname registered in the hosts file

$ curl -s -v http://www.naver.com:$IGWHTTP/productpage

* Trying 192.168.10.102:31355...

* Connected to www.naver.com (192.168.10.102) port 31355 (#0)

> GET /productpage HTTP/1.1

> Host: www.naver.com:31355

> User-Agent: curl/7.81.0

> Accept: */*

>

* Mark bundle as not supporting multiuse

< HTTP/1.1 200 OK

< server: istio-envoy

< date: Mon, 14 Oct 2024 07:24:33 GMT

< content-type: text/html; charset=utf-8

< content-length: 9429

< vary: Cookie

< x-envoy-upstream-service-time: 23

<

<meta charset="utf-8">

<meta http-equiv="X-UA-Compatible" content="IE=edge">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

...

# Verify from the local machine

$ curl -v 3.35.166.8:31355/productpage

- Confirming page access from the local machine

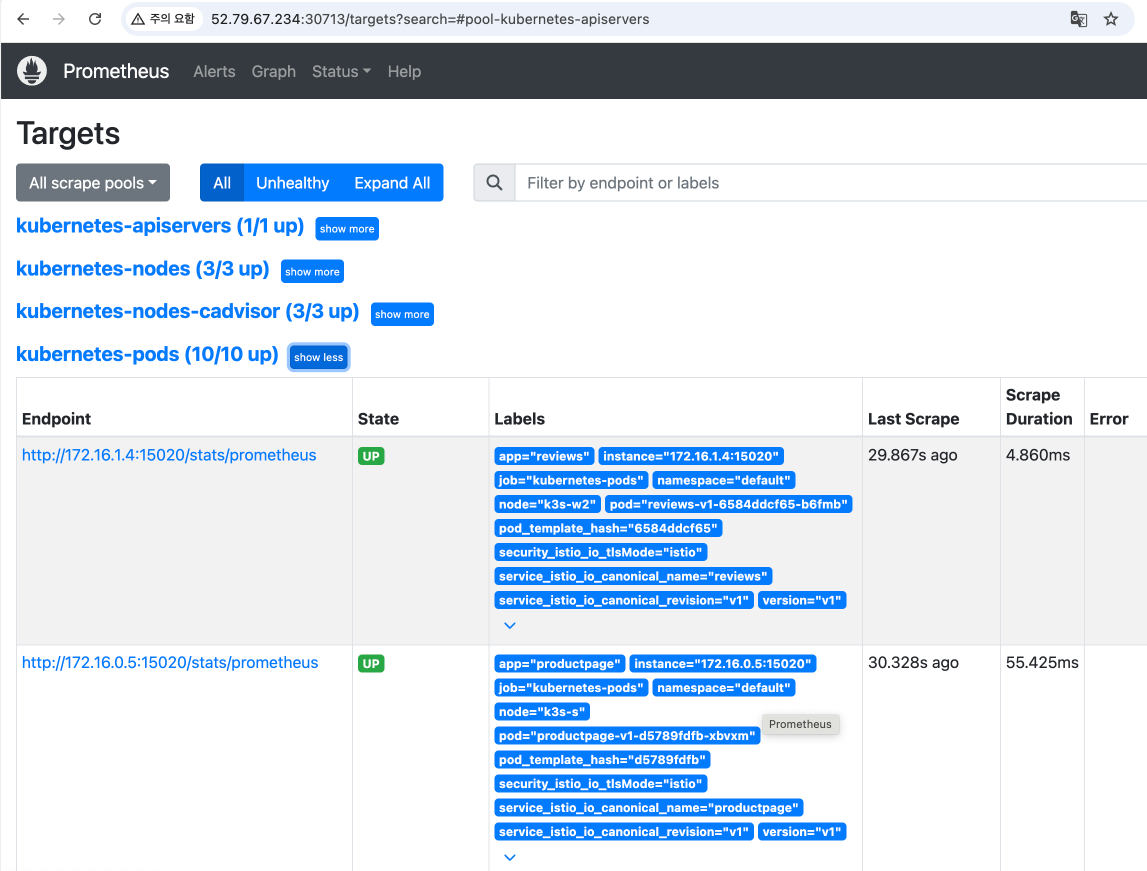

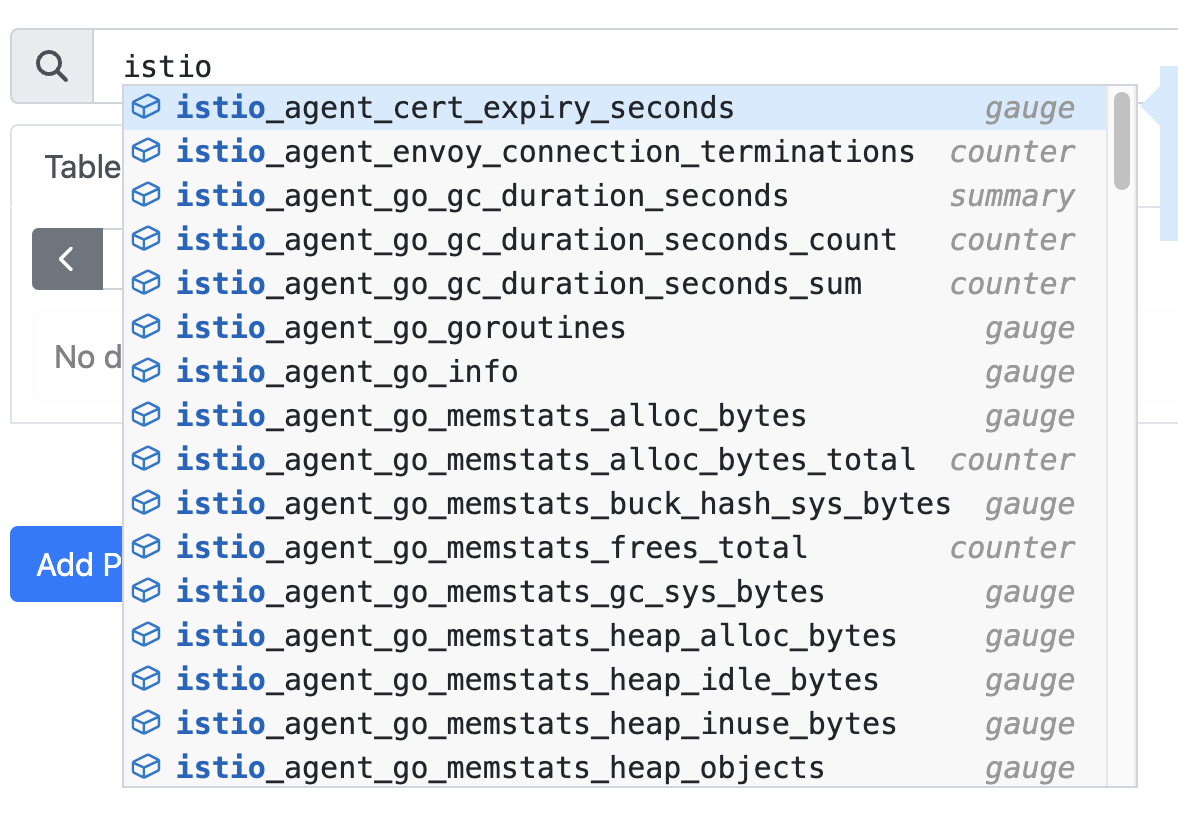

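6. Istio Monitoring

Kiali is a monitoring service that pulls metrics through its integration with Prometheus and Jaeger and visualizes them on a dashboard with animated traffic graphs.

Deploying the Kiali dashboard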

# Install Kiali and the other addons and wait for them to be deployed. : Kiali dashboard, along with Prometheus, Grafana, and Jaeger.

$ tree ~/istio-$ISTIOV/samples/addons/

/root/istio-1.23.2/samples/addons/

├── README.md

├── extras

│ ├── prometheus-operator.yaml

│ ├── skywalking.yaml

│ └── zipkin.yaml

├── grafana.yaml

├── jaeger.yaml

├── kiali.yaml

├── loki.yaml

└── prometheus.yaml

$ kubectl apply -f ~/istio-$ISTIOV/samples/addons # creates every YAML resource in the directory

serviceaccount/grafana created

configmap/grafana created

service/grafana created

deployment.apps/grafana created

configmap/istio-grafana-dashboards created

configmap/istio-services-grafana-dashboards created

deployment.apps/jaeger created

service/tracing created

service/zipkin created

service/jaeger-collector created

serviceaccount/kiali created

configmap/kiali created

clusterrole.rbac.authorization.k8s.io/kiali created

clusterrolebinding.rbac.authorization.k8s.io/kiali created

role.rbac.authorization.k8s.io/kiali-controlplane created

rolebinding.rbac.authorization.k8s.io/kiali-controlplane created

service/kiali created

deployment.apps/kiali created

serviceaccount/loki created

configmap/loki created

configmap/loki-runtime created

service/loki-memberlist created

service/loki-headless created

service/loki created

statefulset.apps/loki created

serviceaccount/prometheus created

configmap/prometheus created

clusterrole.rbac.authorization.k8s.io/prometheus created

clusterrolebinding.rbac.authorization.k8s.io/prometheus created

service/prometheus created

deployment.apps/prometheus created

$ kubectl rollout status deployment/kiali -n istio-system

Waiting for deployment "kiali" rollout to finish: 0 of 1 updated replicas are available...

# Verify

$ kubectl get all,sa,cm -n istio-system

$ kubectl get svc,ep -n istio-system

# Change the Kiali service type

$ kubectl patch svc -n istio-system kiali -p '{"spec":{"type":"NodePort"}}'

service/kiali patched

# Check the Kiali web URL

$ KIALINodePort=$(kubectl get svc -n istio-system kiali -o jsonpath='{.spec.ports[0].nodePort}')

$ echo -e "KIALI UI URL = http://$(curl -s ipinfo.io/ip):$KIALINodePort"

# Change the Grafana service type

$ kubectl patch svc -n istio-system grafana -p '{"spec":{"type":"NodePort"}}'

# Check the Grafana web URL: 7 dashboards

$ GRAFANANodePort=$(kubectl get svc -n istio-system grafana -o jsonpath='{.spec.ports[0].nodePort}')

$ echo -e "Grafana URL = http://$(curl -s ipinfo.io/ip):$GRAFANANodePort"

# Change the Prometheus service type

$ kubectl patch svc -n istio-system prometheus -p '{"spec":{"type":"NodePort"}}'

# Check the Prometheus web URL

$ PROMENodePort=$(kubectl get svc -n istio-system prometheus -o jsonpath='{.spec.ports[0].nodePort}')

$ echo -e "Prometheus URL = http://$(curl -s ipinfo.io/ip):$PROMENodePort"

- In the Prometheus Targets view, confirm port 15020 for each pod

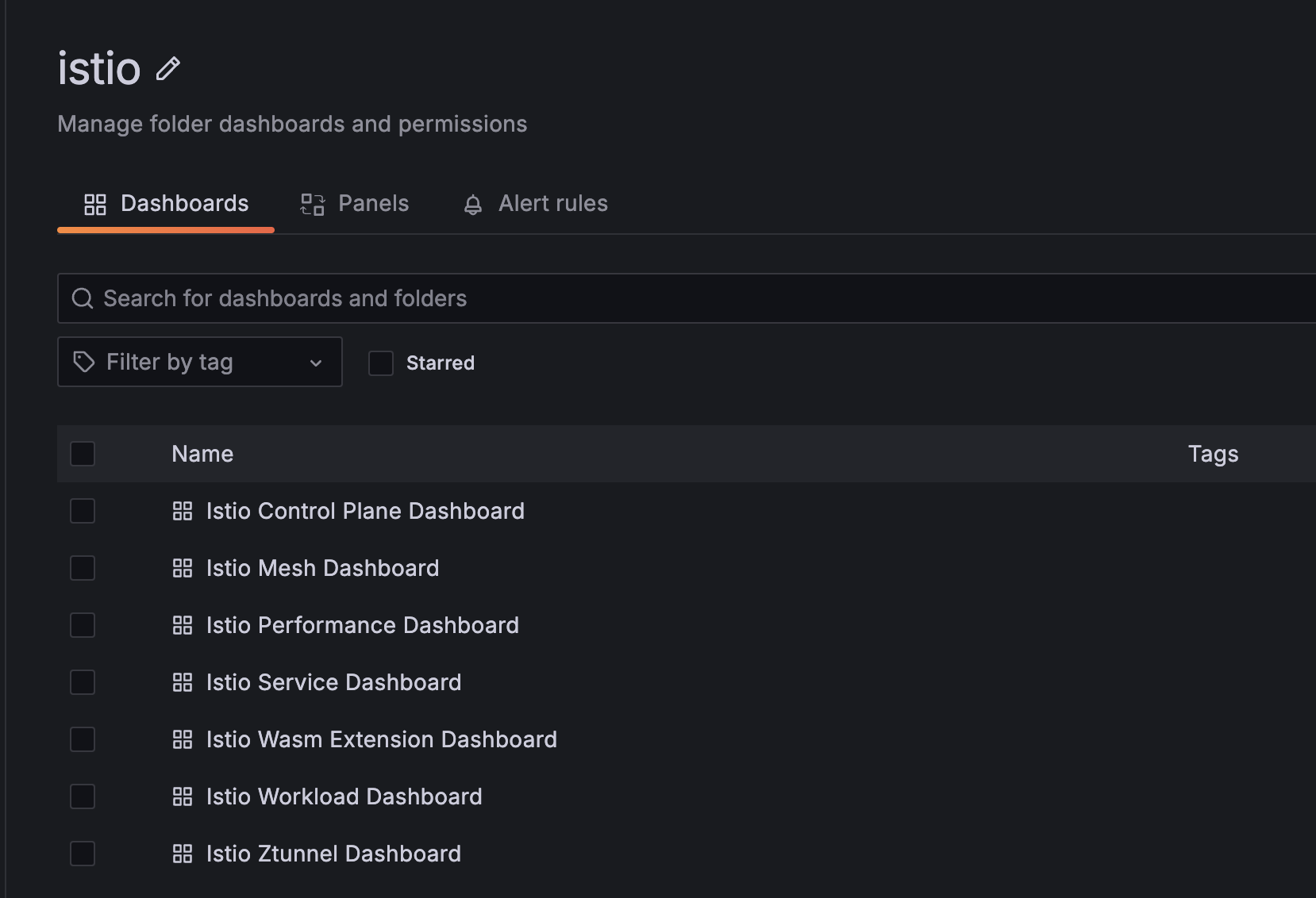

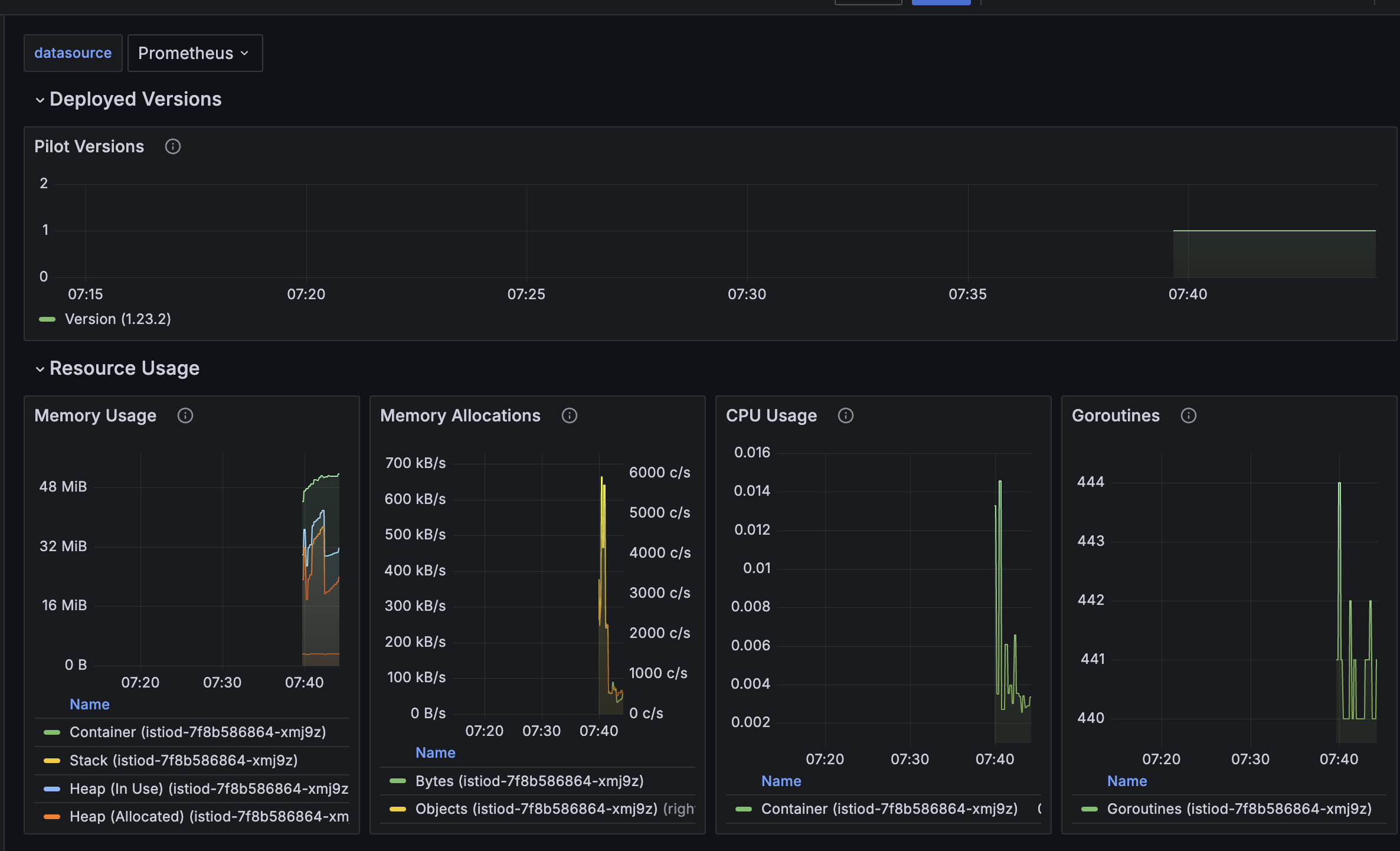

- Confirm the 7 dashboards in Grafana

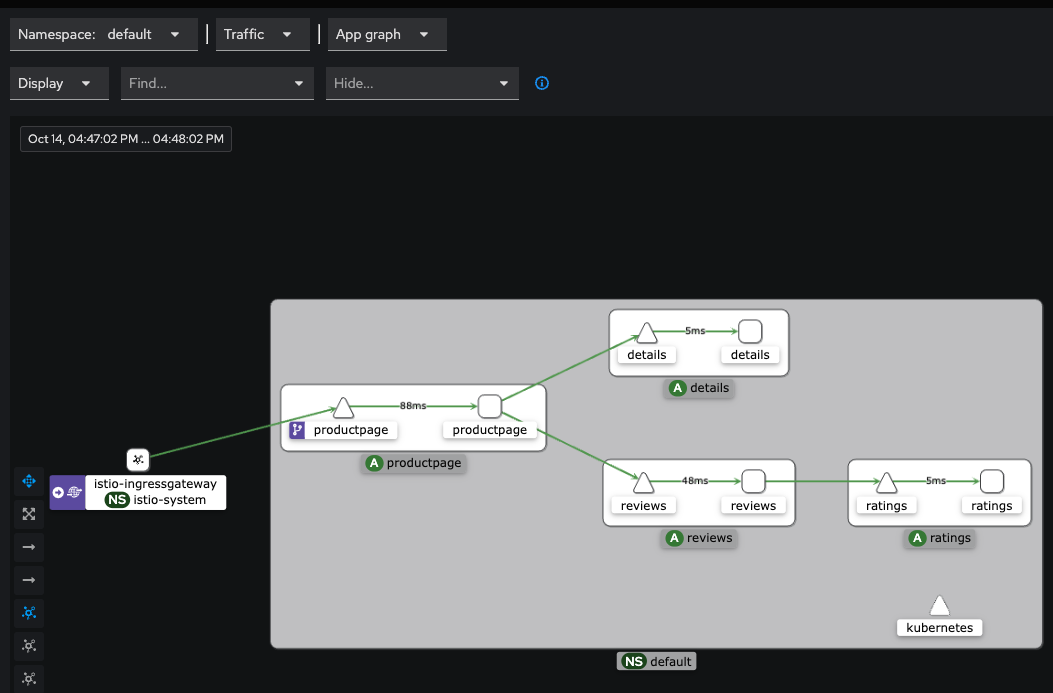

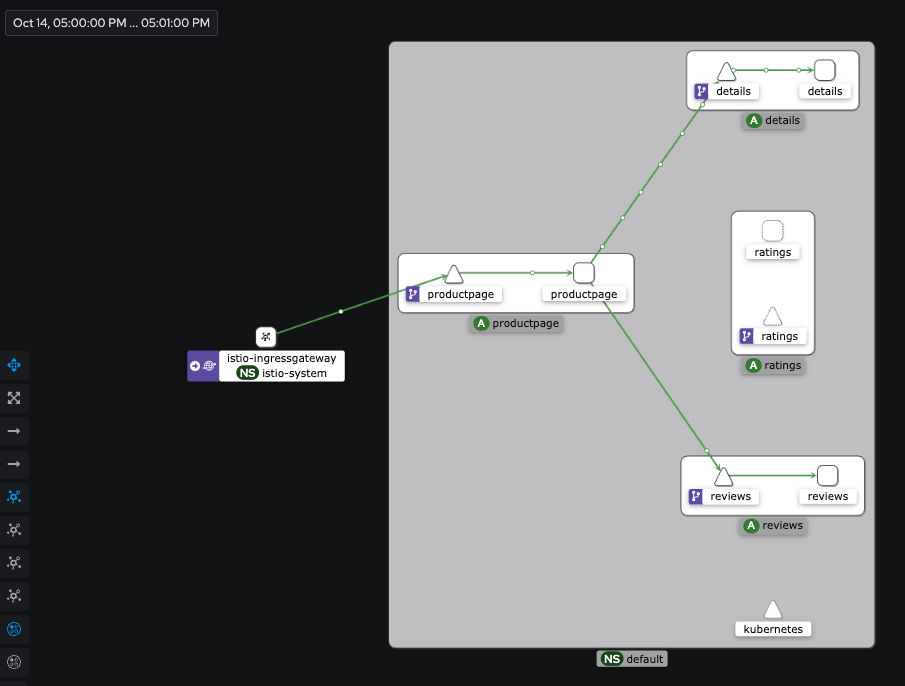

- Accessing the Kiali web UI

7. Istio Traffic Management

We are almost there. Controlling L7 ultimately means managing the traffic at that layer as well, so let's look at how Istio lets us control that behavior.

First, the traffic flow for the service is as follows.

Client PC → Istio ingressgateway pod → (Gateway, VirtualService + DestinationRule) → Cluster (Endpoint - pod)

- Request Routing configuration

# Check the sample files

$ cd ~/istio-$ISTIOV/samples/bookinfo/networking

$ tree

.

├── bookinfo-gateway.yaml

├── certmanager-gateway.yaml

├── destination-rule-all-mtls.yaml

├── destination-rule-all.yaml

├── destination-rule-reviews.yaml

├── egress-rule-google-apis.yaml

├── fault-injection-details-v1.yaml

├── virtual-service-all-v1.yaml

├── virtual-service-details-v2.yaml

├── virtual-service-ratings-db.yaml

├── virtual-service-ratings-mysql-vm.yaml

├── virtual-service-ratings-mysql.yaml

├── virtual-service-ratings-test-abort.yaml

├── virtual-service-ratings-test-delay.yaml

├── virtual-service-reviews-50-v3.yaml

├── virtual-service-reviews-80-20.yaml

├── virtual-service-reviews-90-10.yaml

├── virtual-service-reviews-jason-v2-v3.yaml

├── virtual-service-reviews-test-v2.yaml

├── virtual-service-reviews-v2-v3.yaml

└── virtual-service-reviews-v3.yaml

# Apply the default DestinationRules

$ kubectl apply -f destination-rule-all.yaml

destinationrule.networking.istio.io/productpage created

destinationrule.networking.istio.io/reviews created

destinationrule.networking.istio.io/ratings created

destinationrule.networking.istio.io/details created

# Check the DestinationRules, dr (= destinationrules): the Services view in Kiali also shows the GW, VS, and DR

$ kubectl get dr

NAME HOST AGE

details details 16m

productpage productpage 16m

ratings ratings 16m

reviews reviews 16m

# Check the Istio vs (virtualservices)

$ kubectl get vs

NAME GATEWAYS HOSTS AGE

bookinfo ["bookinfo-gateway"] ["*"] 38m

# Apply VirtualServices so that every microservice routes to its v1 subset

$ kubectl apply -f virtual-service-all-v1.yaml

# Check the Istio vs (virtualservices) >> in Kiali, the traffic paths toward reviews v2 and v3 disappear!

$ kubectl get virtualservices

NAME GATEWAYS HOSTS AGE

bookinfo ["bookinfo-gateway"] ["*"] 39m

details ["details"] 26s

productpage ["productpage"] 26s

ratings ["ratings"] 26s

reviews ["reviews"] 26s

- It is hard to see in the screenshot, but the traffic now concentrates on one side

- Accessing v2

# Apply a VirtualService that sends requests from the user jason to reviews v2

$ kubectl apply -f virtual-service-reviews-test-v2.yaml
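The contents of `virtual-service-reviews-test-v2.yaml` are not shown in this post. As a rough sketch of the technique it relies on, header-based routing, the hypothetical helper `reviews_user_vs` below prints a VirtualService that matches the `end-user` header productpage attaches for a signed-in user and routes that user to the `v2` subset, with everything else falling through to `v1`. The manifest is written from my understanding of the upstream sample; the file shipped with your Istio version may differ (for example in `apiVersion`).

```shell
# Hypothetical helper: prints a VirtualService that sends requests carrying
# the header "end-user: <user>" to reviews v2 and all other requests to v1.
# Assumption: subsets v1 and v2 exist (destination-rule-all.yaml defines them).
reviews_user_vs() {
  user="$1"
  cat <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: reviews
spec:
  hosts:
  - reviews
  http:
  - match:
    - headers:
        end-user:
          exact: ${user}
    route:
    - destination:
        host: reviews
        subset: v2
  - route:
    - destination:
        host: reviews
        subset: v1
EOF
}

# Print the manifest for the user "jason"; pipe it to "kubectl apply -f -"
# to apply it to a cluster.
reviews_user_vs jason
```

The order of the `http` entries matters: the first matching rule wins, so the unconditional `v1` route goes last as the fallback.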

# Check the logs while signed in as jason

$ kubetail -l app=productpage -f

[productpage-v1-d5789fdfb-xbvxm productpage] DEBUG:urllib3.connectionpool:Starting new HTTP connection (1): reviews:9080

[productpage-v1-d5789fdfb-xbvxm productpage] send: b'GET /reviews/0 HTTP/1.1\r\nHost: reviews:9080\r\nuser-agent: curl/8.4.0\r\nAccept-Encoding: gzip, deflate\r\nAccept: */*\r\nConnection: keep-alive\r\nx-request-id: 51d509ba-cd98-9731-885c-36609a26bd8c\r\n\r\n'

[productpage-v1-d5789fdfb-xbvxm productpage] reply: 'HTTP/1.1 200 OK\r\n'

[productpage-v1-d5789fdfb-xbvxm productpage] header: x-powered-by: Servlet/3.1

[productpage-v1-d5789fdfb-xbvxm productpage] header: content-type: application/json

[productpage-v1-d5789fdfb-xbvxm productpage] header: date: Mon, 14 Oct 2024 08:04:01 GMT

[productpage-v1-d5789fdfb-xbvxm productpage] header: content-language: en-US

[productpage-v1-d5789fdfb-xbvxm productpage] header: content-length: 358

[productpage-v1-d5789fdfb-xbvxm productpage] header: x-envoy-upstream-service-time: 4

[productpage-v1-d5789fdfb-xbvxm productpage] header: server: envoy

[productpage-v1-d5789fdfb-xbvxm productpage] DEBUG:urllib3.connectionpool:http://reviews:9080 "GET /reviews/0 HTTP/1.1" 200 358

[productpage-v1-d5789fdfb-xbvxm productpage] INFO:werkzeug:::ffff:127.0.0.6 - - [14/Oct/2024 08:04:01] "GET /productpage HTTP/1.1" 200 -

[productpage-v1-d5789fdfb-xbvxm productpage] INFO:werkzeug:::1 - - [14/Oct/2024 08:04:04] "GET /metrics HTTP/1.1" 200 -

[productpage-v1-d5789fdfb-xbvxm productpage] INFO:werkzeug:::1 - - [14/Oct/2024 08:04:19] "GET /metrics HTTP/1.1" 200 -

- Sign in through "sign in", refresh the page, and check the dashboard

- Other features worth testing

- Traffic Shifting: useful for deployment strategies; it supports percentage-based traffic distribution.

- Circuit Breaking: used to cut off a service when its failure starts to spread to other services.
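To make the percentage-based split of Traffic Shifting concrete, here is a minimal sketch. The sample directory listed earlier contains `virtual-service-reviews-80-20.yaml`, but its contents are not shown in this post, so the manifest below is my own illustration rather than a copy of that file. The helper name `reviews_weighted_vs` is hypothetical, and the check that the two weights add up to 100 is a choice of this sketch so that the values read as percentages.

```shell
# Hypothetical helper: prints a VirtualService that splits "reviews" traffic
# between subsets v1 and v2 using the two weights given as arguments.
# Assumption: subsets v1 and v2 exist (destination-rule-all.yaml defines them).
reviews_weighted_vs() {
  w1="$1"
  w2="$2"
  # This sketch insists on a total of 100 so the weights read as percentages.
  if [ "$((w1 + w2))" -ne 100 ]; then
    echo "weights must sum to 100" >&2
    return 1
  fi
  cat <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: reviews
spec:
  hosts:
  - reviews
  http:
  - route:
    - destination:
        host: reviews
        subset: v1
      weight: ${w1}
    - destination:
        host: reviews
        subset: v2
      weight: ${w2}
EOF
}

# Print an 80/20 split; pipe it to "kubectl apply -f -" to apply it.
reviews_weighted_vs 80 20
```

Shifting the split step by step (for example 90/10, then 50/50, then 0/100) is how this feature maps onto a canary-style rollout.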

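7. Istio Traffic Flow

- Using Istio brings benefits, but drawbacks naturally come with it. Because all service traffic is delivered through the Envoy proxy, the network flow gains an extra hop at every sidecar it crosses.

- Because of this, when you first work with a service mesh, the complex structure and the added latency can force you to troubleshoot across many areas.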
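One rough way to put a number on the proxy path is the `x-envoy-upstream-service-time` response header, which appears in the `curl -v` output earlier in this post (value 23). Envoy documents it as the time in milliseconds spent by the upstream host processing the request plus the network latency between Envoy and that host. The sketch below only extracts the value from a header dump; the helper name `upstream_ms` is hypothetical.

```shell
# Hypothetical helper: reads HTTP response headers on stdin (plain or in
# "curl -v" form with a leading "< ") and prints the value of
# x-envoy-upstream-service-time in milliseconds.
upstream_ms() {
  tr -d '\r' | awk -F': ' 'tolower($1) ~ /x-envoy-upstream-service-time$/ { print $2 }'
}

# Usage against the ingress gateway (not executed here):
#   curl -s -o /dev/null -D - "http://localhost:$IGWHTTP/productpage" | upstream_ms
```

Comparing this value with the total time reported by the client gives a first hint of where latency is being added, before digging into the proxy logs.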

cat <<EOF | kubectl create -f -
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx-app
spec:
  terminationGracePeriodSeconds: 0
  containers:
  - name: nginx-container
    image: nginx
    ports:
    - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: svc-nginx
spec:
  ports:
  - name: svc-nginx
    port: 80
    targetPort: 80
  selector:
    app: nginx-app
  type: ClusterIP
---
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: test-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "*"
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: nginx-service
spec:
  hosts:
  - "www.naver.com"
  gateways:
  - test-gateway
  http:
  - route:
    - destination:
        host: svc-nginx
        port:
          number: 80
EOF

# Monitoring

$ watch -d "kubectl get svc -n istio-system -l app=istio-ingressgateway;echo;kubectl get pod -n istio-system -o wide -l app=istio-ingressgateway;echo;kubectl get pod -owide nginx-pod"

$ watch -d "kubectl get pod -n istio-system -o wide -l app=istio-ingressgateway;echo;kubectl get pod -owide nginx-pod"

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

istio-ingressgateway NodePort 10.10.200.158 <none> 15021:32608/TCP,80:31355/TCP,443:31041/TCP,31400:30436/TCP,15443:32650/TCP 3h3m

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

istio-ingressgateway-5f9f654d46-mc6xq 1/1 Running 0 3h3m 172.16.2.4 k3s-w1 <none> <none>

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-pod 0/2 PodInitializing 0 9s 172.16.0.7 k3s-s <none> <none>

# Run the following on testpc

while true; do curl -s www.naver.com:31355 | grep -o "<title>.*</title>" ; echo "--------------" ; sleep 1; done

# Turn off mTLS between istio-proxy peers

cat <<EOF | kubectl create -f -
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: "example-workload-policy"
spec:
  selector:
    matchLabels:
      app: nginx-app
  portLevelMtls:
    80:
      mode: DISABLE
EOF

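# After some time, traffic stops flowing

8. Closing thoughts

- There are several service mesh solutions, such as Linkerd and Consul. This time we looked at Istio, from the Istio CLI through deployment on Kubernetes, the service flow, and basic traffic control.

- Istio already has a large user base, so plenty of information and test write-ups are available, which makes it more approachable than the other solutions.

- Using a service mesh at work would probably also require moving the application side to an MSA environment, so adopting it right away may be difficult, but this was a good starting point for continued study.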
