
K8S service

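0. Yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: pod-service
spec:
  selector:
    app: test
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
      nodePort: 31234
  type: NodePort
```

- To understand how traffic flows in k8s, you need to know three fields: spec.selector, spec.ports.port, and spec.ports.targetPort.

- spec.selector: the labels a Pod must carry to become a backend of this Service (here, app: test).

- spec.ports.port: the port clients use to reach the Service (on its ClusterIP).

- spec.ports.targetPort: the port of the service running in the container inside the Pod.

- spec.ports.nodePort: the port opened on the nodes; clients connect to a node's IP plus this nodePort. By default this works on any node, not only on the nodes where the Pods are scheduled.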
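As a small illustration of where each of these fields sits, the sketch below prints the NodePort manifest above from shell arguments using a heredoc. The helper name `render_nodeport_svc` and its argument order are hypothetical, made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: print a NodePort Service manifest.
# $1 = Service name, $2 = value of the "app" label matched by spec.selector,
# $3 = port (Service port), $4 = targetPort (container port), $5 = nodePort
render_nodeport_svc() {
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: $1
spec:
  selector:
    app: $2
  ports:
    - protocol: TCP
      port: $3
      targetPort: $4
      nodePort: $5
  type: NodePort
EOF
}

# Reproduces the example manifest above.
render_nodeport_svc pod-service test 80 80 31234
```

Piping the output into `kubectl apply -f -` would create the Service; the heredoc simply keeps the three port fields side by side so the mapping is easy to read.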

1. Service

- In k8s, a Service is a virtual component that exposes an application running in Pods to the network, connecting the application with its users.

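- ClusterIP

The most basic Service type for communication in k8s; it is reachable only from inside the k8s cluster.

Traffic arriving at the ClusterIP is forwarded to PodIP:targetPort of one of the selected Pods, so the application is reachable only from within the cluster.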

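Reaching a ClusterIP Service

Fixed ClusterIP

Setting spec.clusterIP pins the Service's virtual IP. Pod IPs change whenever Pods are recreated, but clients keep using the same ClusterIP, and with a pinned value the address stays the same even if the Service itself is deleted and recreated, so tests during development do not have to track newly started Pods. The address must lie inside the cluster's service CIDR and must not already be in use.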

```yaml
apiVersion: v1
kind: Service
metadata:
  name: gasida-service
spec:
  selector:
    app: test
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  clusterIP: 10.96.0.100 # fixed ClusterIP
  type: ClusterIP
```
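A fixed clusterIP has to fall inside the cluster's service CIDR (the `service-cluster-ip-range` that `kubectl cluster-info dump` prints later in this post); otherwise the API server rejects the Service. The sketch below checks that locally in plain POSIX shell. The function names are hypothetical, and 10.96.0.0/12 is only an assumed example range (a common kubeadm default); this lab's cluster appears to use 10.200.1.0/24 instead, judging from the ClusterIPs shown later.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  a=${1%%.*}; rest=${1#*.}
  b=${rest%%.*}; rest=${rest#*.}
  c=${rest%%.*}; d=${rest#*.}
  echo $(( ($a << 24) + ($b << 16) + ($c << 8) + $d ))
}

# Succeed (exit status 0) if IPv4 address $1 lies inside CIDR $2, e.g. 10.96.0.0/12.
ip_in_cidr() {
  net=${2%/*}
  bits=${2#*/}
  addr=$(ip_to_int "$1")
  base=$(ip_to_int "$net")
  # Netmask with the top $bits bits set and the rest cleared.
  mask=$(( (0xFFFFFFFF << (32 - $bits)) & 0xFFFFFFFF ))
  [ $(( addr & mask )) -eq $(( base & mask )) ]
}

# 10.96.0.0/12 is an assumed example range, not taken from this cluster.
if ip_in_cidr 10.96.0.100 10.96.0.0/12; then
  echo "10.96.0.100 is inside 10.96.0.0/12"
fi
```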

Domain name

- CoreDNS, which is deployed when the k8s cluster is created, lets you reach a Service by a domain name of the form `<service-name>.<namespace>.svc.cluster.local`.
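The naming rule can be written down as a tiny helper. This is only a sketch: `svc_fqdn` is a hypothetical name, and it assumes the default cluster domain `cluster.local`, which a cluster administrator can change.

```shell
#!/bin/sh
# Build the DNS name CoreDNS serves for a Service:
#   <service-name>.<namespace>.svc.<cluster-domain>
# $2 defaults to the "default" namespace, $3 to the cluster.local domain.
svc_fqdn() {
  echo "$1.${2:-default}.svc.${3:-cluster.local}"
}

svc_fqdn gasida-service          # gasida-service.default.svc.cluster.local
svc_fqdn kube-dns kube-system    # kube-dns.kube-system.svc.cluster.local
```

Inside a Pod, resolving such a name (for example with nslookup) should return the Service's ClusterIP; within the same namespace the short Service name usually works too, thanks to the Pod's DNS search path.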

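- NodePort

- Instead of using Pod IPs, NodePort opens a port on the nodes themselves (master or worker), and users connect through it. The default NodePort range is 30000-32767.

- Its limitations are the restricted port range and the fact that, because clients connect to one specific node's IP:nodePort, there is no automatic load balancing across nodes. LoadBalancer was introduced to address this.

- Because clients access NodeIP:Port, you also have to handle node IP changes during development, and exposing node IPs is a security concern.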
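A quick local check for the default range can be sketched as below. The function name is hypothetical, and the 30000-32767 bounds are only the default: a cluster can change them with the kube-apiserver flag `--service-node-port-range`, in which case the API server enforces that range instead.

```shell
#!/bin/sh
# Default NodePort range (configurable via kube-apiserver --service-node-port-range).
NODEPORT_MIN=30000
NODEPORT_MAX=32767

# Succeed if $1 is an integer inside the NodePort range.
valid_nodeport() {
  case $1 in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$1" -ge "$NODEPORT_MIN" ] && [ "$1" -le "$NODEPORT_MAX" ]
}

for p in 31234 30716 8080 40000; do
  if valid_nodeport "$p"; then echo "$p ok"; else echo "$p out of range"; fi
done
```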

```yaml
apiVersion: v1
kind: Service
metadata:
  name: pod-service
spec:
  selector:
    app: test
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
      nodePort: 31234
  type: NodePort
```

- LoadBalancer

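- The LoadBalancer type lets clients outside the cluster reach the Service through the IP assigned as its EXTERNAL-IP.

- In the cloud, the provider's load balancer is used: on EKS, for example, deploying such a Service provisions a load balancer, and the Service becomes reachable through that load balancer's domain name.

- On-premises, a load balancer such as MetalLB provides the EXTERNAL-IP.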

apiVersion: v1

kind: Service

metadata:

name: service-loadbalancer

spec:

selector:

app: test

ports:

- protocol: TCP

port: 80

targetPort: 80

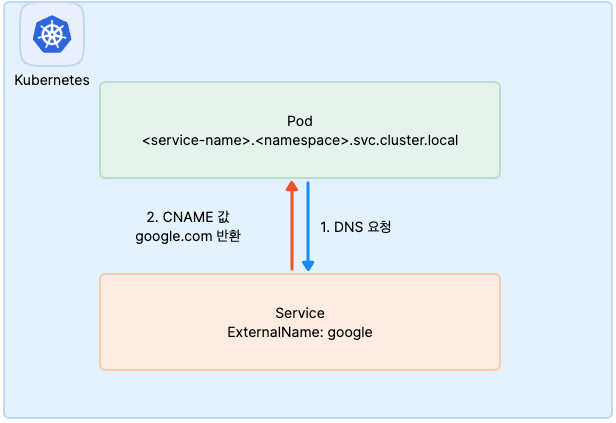

type: LoadBalance - ExternalName

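```yaml
apiVersion: v1
kind: Service
metadata:
  name: service-externalname
spec:
  type: ExternalName
  externalName: google.com
```

- Because it works by domain name, no IP:Port is needed.

- An external public domain (google.com) can be used inside the k8s cluster under a k8s Service name, and it is also reachable as `<service-name>.<namespace>.svc.cluster.local`.

- Unlike the other types, ExternalName works by FQDN (CoreDNS answers with a CNAME record pointing to externalName), so it does not use spec.selector to find Pods.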

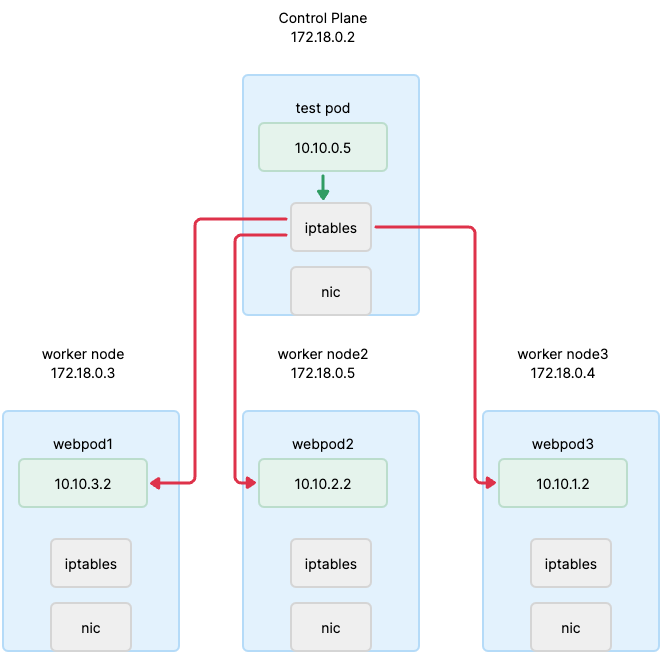

2. Kube-proxy

- kube-proxy enables communication between Services and Pods in the cluster: it makes sure that traffic sent to a Service IP is routed to one of the Pods behind it.

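2.1 iptables proxy mode

- How it works

- kube-proxy uses netfilter to install packet-handling (DNAT) rules directly in the Linux kernel.

- In other words, netfilter does the actual forwarding; kube-proxy only manages the rules.

- Single point of failure (SPOF): kube-proxy runs as a DaemonSet, so if its Pod fails it is restarted automatically. Meanwhile the rules it already installed live in the kernel, so existing Service traffic keeps flowing; only rule updates for new or changed Services and endpoints wait until kube-proxy is back.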

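2.2 IPVS proxy mode

- How it works

- IPVS (IP Virtual Server) is the Layer 4 load balancer built into the Linux kernel; in this mode it acts as the Service proxy.

- It generally performs better than iptables mode because IPVS looks Services up in hash tables instead of walking rule chains one by one, which matters as the number of Services grows. It also offers several load-balancing algorithms (for example rr, lc, sh), which can improve traffic throughput.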
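To contrast with the random choice of iptables mode, here is a toy model of round robin, which is kube-proxy's default IPVS scheduler (`rr`): successive requests go to the endpoints in turn. This only mimics the scheduling idea in shell and is not how IPVS is implemented; `rr_pick` is a hypothetical name, and the endpoint addresses are the three svc-clusterip Pods that appear in section 3.

```shell
#!/bin/sh
# Toy round-robin scheduler: request number $1 (starting at 0) goes to
# endpoint ($1 mod N) among the remaining N arguments.
rr_pick() {
  req=$1
  shift
  idx=$(( req % $# + 1 ))
  eval "echo \"\${$idx}\""
}

# Cycles 10.10.1.2:80, 10.10.2.2:80, 10.10.3.2:80, then starts over.
for req in 0 1 2 3 4 5; do
  rr_pick "$req" 10.10.1.2:80 10.10.2.2:80 10.10.3.2:80
done
```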

2.3 nftables proxy mode

- Available only on Linux nodes, and the kernel must be 5.13 or later.

- As of k8s 1.31 the mode is still relatively new and may not be compatible with every network plugin.
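The kernel requirement can be checked on a node before switching kube-proxy to nftables mode. A minimal sketch, assuming the node's `uname -r` output starts with MAJOR.MINOR; the helper name is hypothetical and only the major and minor numbers are compared.

```shell
#!/bin/sh
# Succeed if kernel release $2 (e.g. the output of `uname -r`) is at least
# version $1 (MAJOR.MINOR).
kernel_at_least() {
  want_major=${1%%.*}
  want_minor=${1#*.}
  have=${2%%-*}            # drop suffixes such as "-91-generic"
  have_major=${have%%.*}
  rest=${have#*.}
  have_minor=${rest%%.*}
  [ "$have_major" -gt "$want_major" ] ||
    { [ "$have_major" -eq "$want_major" ] && [ "$have_minor" -ge "$want_minor" ]; }
}

# nftables proxy mode needs 5.13 or later.
if kernel_at_least 5.13 "$(uname -r)"; then
  echo "kernel OK for nftables mode"
else
  echo "kernel too old for nftables mode"
fi
```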

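```
table ip nat {
    chain prerouting {
        type nat hook prerouting priority 0; policy accept;
        ip saddr 192.168.100.100 tcp dport 30080 dnat to 10.96.0.11:8080
        tcp dport 30080 dnat to 10.96.0.10:8080
    }
}
```

- Traffic from 192.168.100.100 to port 30080 is redirected to 10.96.0.11:8080.

- Any other traffic to port 30080 is forwarded to 10.96.0.10:8080. Rules are evaluated top to bottom and the first matching dnat wins, so the more specific source-IP rule has to come first.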
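To make the evaluation order concrete, here is a toy shell model of the two prerouting rules above. It only mimics the first-match logic for illustration and does not touch nftables; the function name `nat_prerouting` is hypothetical.

```shell
#!/bin/sh
# Toy model (not nft itself) of the prerouting chain above: rules are tried
# top to bottom and the first dnat that matches decides the destination.
# $1 = source IP, $2 = destination TCP port.
# Prints the DNAT target, or "accept" (the chain policy) when nothing matches.
nat_prerouting() {
  if [ "$1" = "192.168.100.100" ] && [ "$2" = "30080" ]; then
    echo "10.96.0.11:8080"
  elif [ "$2" = "30080" ]; then
    echo "10.96.0.10:8080"
  else
    echo "accept"
  fi
}

nat_prerouting 192.168.100.100 30080   # 10.96.0.11:8080
nat_prerouting 192.168.100.7 30080     # 10.96.0.10:8080
nat_prerouting 192.168.100.7 22        # accept
```

Swapping the two `if` branches would send every client, including 192.168.100.100, to 10.96.0.10:8080, which is exactly why the specific rule sits first in the real chain.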

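3. Traffic flow

- Provided by default

- Deploying Gasida's YAML

Order of events when a Service is created

- apiserver → kube-proxy (running on every node, watching the apiserver) → iptables rules; CoreDNS also watches the apiserver and starts answering for the Service's DNS name. kubelet does not program Service rules.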

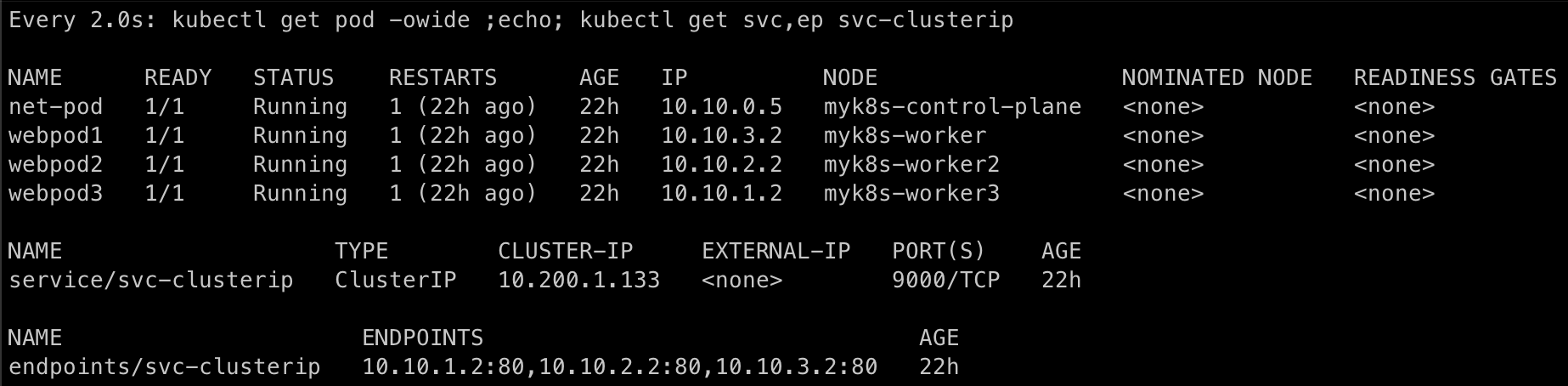

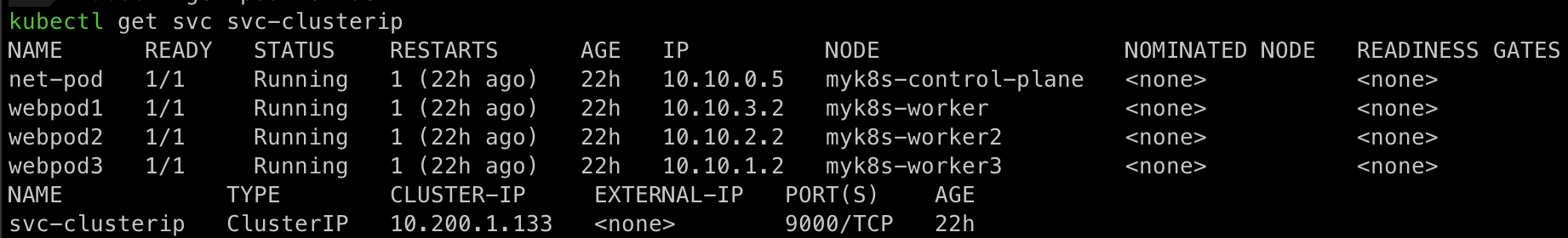

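Deploying and checking Gasida's YAML

Monitoring

watch -d 'kubectl get pod -owide ;echo; kubectl get svc,ep svc-clusterip'

- watch: reruns the given command periodically, every 2 seconds by default.

- -d: highlights what changed since the previous run.

- -n: changes the interval from the default 2 seconds (for example, -n 1).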

생성

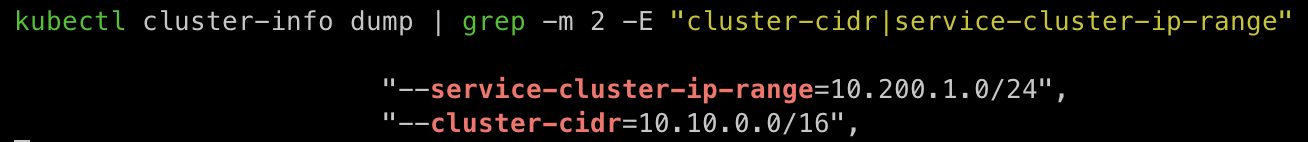

kubectl apply -f **3pod**.yaml,**netpod**.yaml,**svc-clusterip**.yaml파드와 서비스 사용 네트워크 대역 정보 확인

kubectl cluster-info dump | grep -m 2 -E "cluster-cidr|service-cluster-ip-range"

배포 된 오브젝트 확인

service 확인

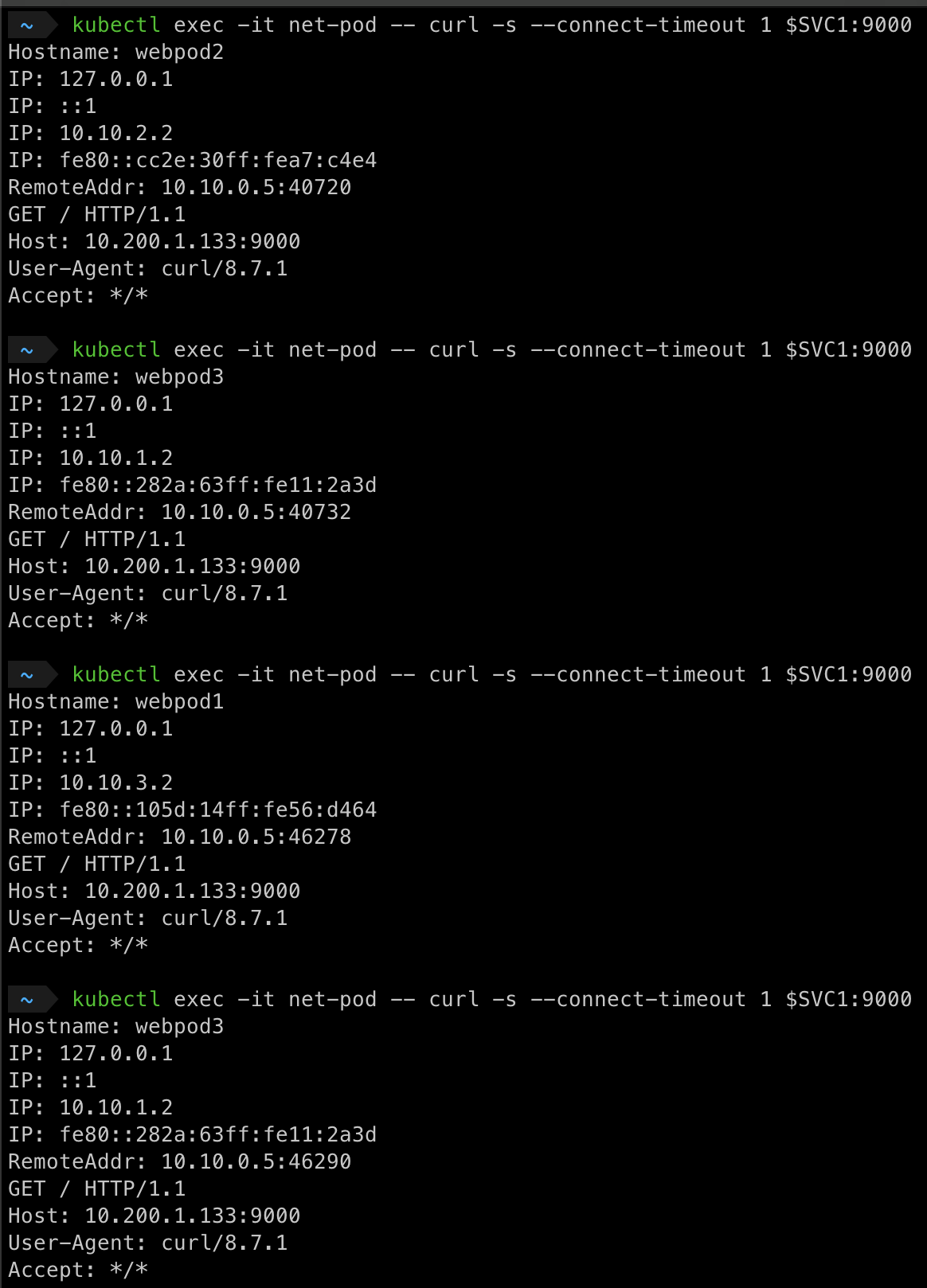

- svc-clusterip → app=webpod가 있는 pod 확인 → webapp1,webapp2,webapp3 라운드 로빈으로 라우팅을 해줍니다.
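In iptables mode the choice of Pod is made by the `statistic` match in `random` mode, visible in the KUBE-SVC-KBDEBIL6IU6WL7RF rules dumped in section 4. With n endpoints, rule k matches with probability 1/(n-k+1) and the last rule has no statistic match at all, so each Pod ends up with the same overall share of 1/n (for three Pods: 1/3, then (2/3)·(1/2) = 1/3, then the remaining 1/3). The sketch below just prints those per-rule values; `sep_probabilities` is a hypothetical name.

```shell
#!/bin/sh
# Print the per-rule probabilities that make each of $1 endpoints equally
# likely when the rules are tried in order and the last one is a catch-all.
sep_probabilities() {
  n=$1
  k=1
  while [ "$k" -le "$n" ]; do
    remaining=$((n - k + 1))
    if [ "$remaining" -eq 1 ]; then
      echo "rule $k: catch-all"
    else
      awk -v k="$k" -v r="$remaining" \
        'BEGIN { printf "rule %d: --probability %.11f\n", k, 1 / r }'
    fi
    k=$((k + 1))
  done
}

sep_probabilities 3
# rule 1: --probability 0.33333333333
# rule 2: --probability 0.50000000000
# rule 3: catch-all
```

iptables itself prints 0.33333333349 for the first rule, presumably because the statistic match stores the probability as a fixed-point integer.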

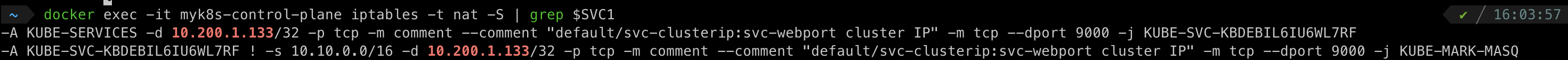

kubectl describe svc svc-clusterip

```
Name:                     svc-clusterip
Namespace:                default
Labels:                   <none>
Annotations:              <none>
Selector:                 app=webpod
Type:                     ClusterIP
IP Family Policy:         SingleStack
IP Families:              IPv4
IP:                       10.200.1.133
IPs:                      10.200.1.133
Port:                     svc-webport  9000/TCP
TargetPort:               80/TCP
Endpoints:                10.10.3.2:80,10.10.1.2:80,10.10.2.2:80   # the Pods currently behind the Service
Session Affinity:         None
Internal Traffic Policy:  Cluster
Events:                   <none>
```

Check the iptables rules that kube-proxy created automatically

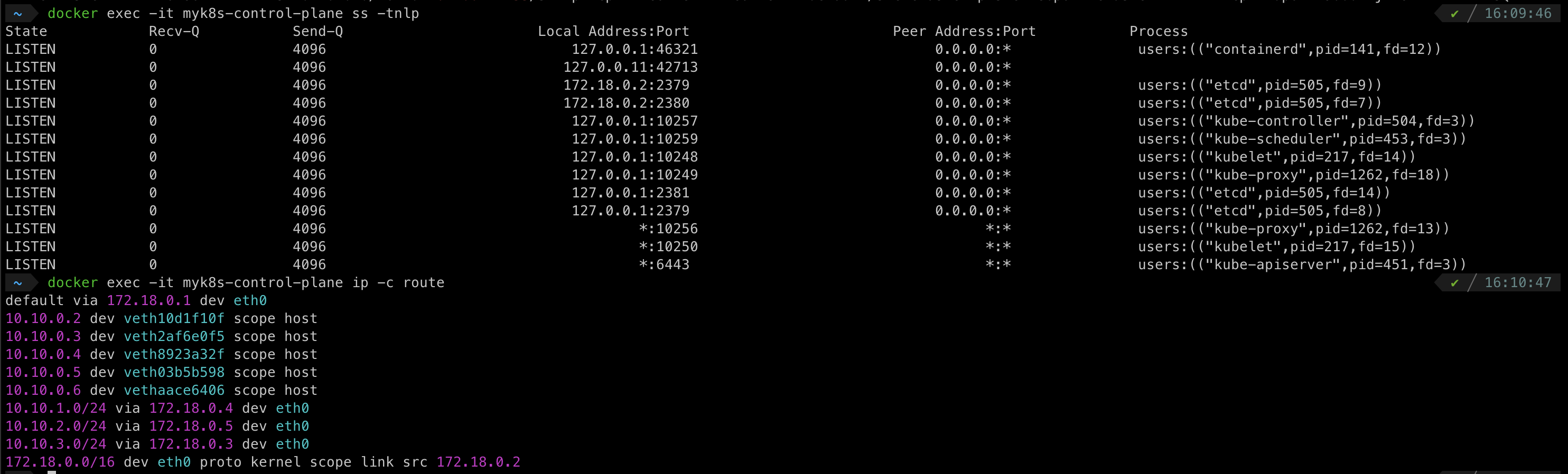

myk8s-control-plane

myk8s-worker

myk8s-worker2

myk8s-worker3

iptables rule로 처리 되는 것을 확인

10.200.1.133/32에 대한 정보가 없음

호출 시 pod가 변경 되는 것을 확인

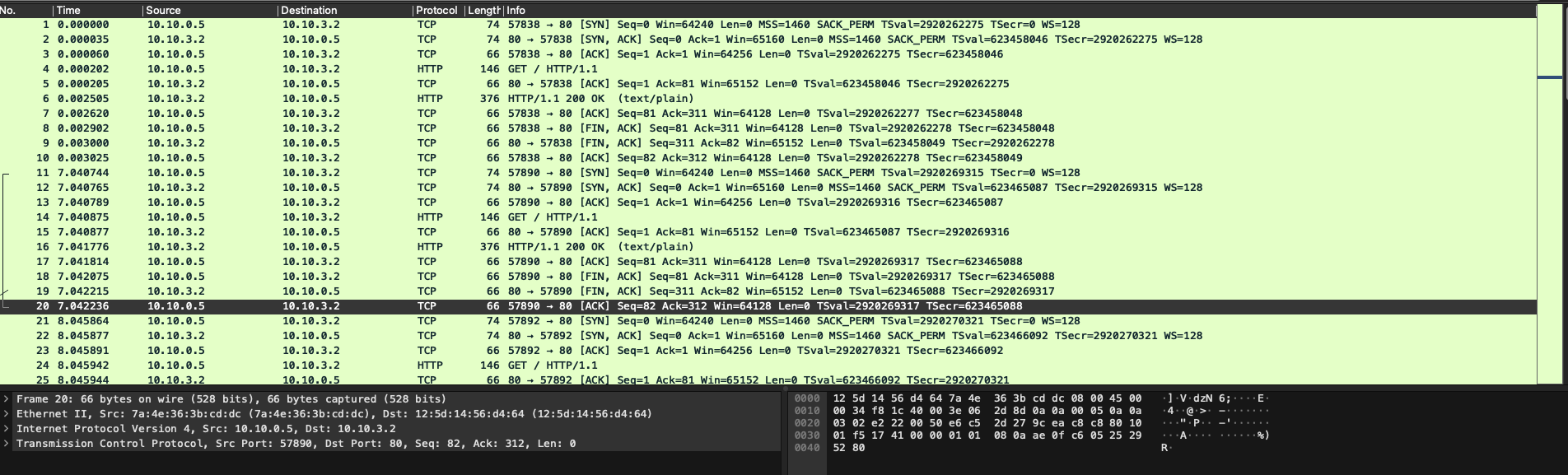

tcp dump wireshark

4. Checking the iptables rules

- docker exec -it myk8s-worker bash

- Check NIC information

```
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: vetha2cfde2e@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default
    link/ether 7a:4e:36:3b:cd:dc brd ff:ff:ff:ff:ff:ff link-netns cni-f7bd565b-cf2e-078e-23e1-aa9b9d201d79
7: eth0@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default
    link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

default via 172.18.0.1 dev eth0
10.10.0.0/24 via 172.18.0.2 dev eth0
10.10.1.0/24 via 172.18.0.4 dev eth0
10.10.2.0/24 via 172.18.0.5 dev eth0
10.10.3.2 dev vetha2cfde2e scope host
172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.3

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: vetha2cfde2e@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 7a:4e:36:3b:cd:dc brd ff:ff:ff:ff:ff:ff link-netns cni-f7bd565b-cf2e-078e-23e1-aa9b9d201d79
    inet 10.10.3.1/32 scope global vetha2cfde2e
       valid_lft forever preferred_lft forever
    inet6 fe80::784e:36ff:fe3b:cddc/64 scope link
       valid_lft forever preferred_lft forever
7: eth0@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.18.0.3/16 brd 172.18.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fc00:f853:ccd:e793::3/64 scope global nodad
       valid_lft forever preferred_lft forever
    inet6 fe80::42:acff:fe12:3/64 scope link
       valid_lft forever preferred_lft forever
```

- docker exec -it myk8s-worker2 bash

- Check NIC information

```
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: vethab6d3911@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default
    link/ether 4a:9c:7f:63:60:13 brd ff:ff:ff:ff:ff:ff link-netns cni-1268eba7-f13d-9bcf-e768-eb0b7d743a3e
11: eth0@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default
    link/ether 02:42:ac:12:00:05 brd ff:ff:ff:ff:ff:ff link-netnsid 0

default via 172.18.0.1 dev eth0
10.10.0.0/24 via 172.18.0.2 dev eth0
10.10.1.0/24 via 172.18.0.4 dev eth0
10.10.2.2 dev vethab6d3911 scope host
10.10.3.0/24 via 172.18.0.3 dev eth0
172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.5

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: vethab6d3911@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 4a:9c:7f:63:60:13 brd ff:ff:ff:ff:ff:ff link-netns cni-1268eba7-f13d-9bcf-e768-eb0b7d743a3e
    inet 10.10.2.1/32 scope global vethab6d3911
       valid_lft forever preferred_lft forever
    inet6 fe80::489c:7fff:fe63:6013/64 scope link
       valid_lft forever preferred_lft forever
11: eth0@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:12:00:05 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.18.0.5/16 brd 172.18.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fc00:f853:ccd:e793::5/64 scope global nodad
       valid_lft forever preferred_lft forever
    inet6 fe80::42:acff:fe12:5/64 scope link
       valid_lft forever preferred_lft forever
```

- docker exec -it myk8s-worker3 bash

- Check NIC information

```
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: vethd783131c@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default
    link/ether 5a:80:a2:df:8b:53 brd ff:ff:ff:ff:ff:ff link-netns cni-4a58a924-b77b-6de8-a5a2-43302aa40568
9: eth0@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default
    link/ether 02:42:ac:12:00:04 brd ff:ff:ff:ff:ff:ff link-netnsid 0

default via 172.18.0.1 dev eth0
10.10.0.0/24 via 172.18.0.2 dev eth0
10.10.1.2 dev vethd783131c scope host
10.10.2.0/24 via 172.18.0.5 dev eth0
10.10.3.0/24 via 172.18.0.3 dev eth0
172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.4

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: vethd783131c@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 5a:80:a2:df:8b:53 brd ff:ff:ff:ff:ff:ff link-netns cni-4a58a924-b77b-6de8-a5a2-43302aa40568
    inet 10.10.1.1/32 scope global vethd783131c
       valid_lft forever preferred_lft forever
    inet6 fe80::5880:a2ff:fedf:8b53/64 scope link
       valid_lft forever preferred_lft forever
9: eth0@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:12:00:04 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.18.0.4/16 brd 172.18.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fc00:f853:ccd:e793::4/64 scope global nodad
       valid_lft forever preferred_lft forever
    inet6 fe80::42:acff:fe12:4/64 scope link
       valid_lft forever preferred_lft forever
```

- Check the iptables rules

root@myk8s-control-plane:/# iptables -t filter -S

```
-P INPUT ACCEPT
-P FORWARD ACCEPT
-P OUTPUT ACCEPT
-N KUBE-EXTERNAL-SERVICES
-N KUBE-FIREWALL
-N KUBE-FORWARD
-N KUBE-KUBELET-CANARY
-N KUBE-NODEPORTS
-N KUBE-PROXY-CANARY
-N KUBE-PROXY-FIREWALL
-N KUBE-SERVICES
-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL
-A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS
-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES
-A INPUT -j KUBE-FIREWALL
-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL
-A FORWARD -m comment --comment "kubernetes forwarding rules" -j KUBE-FORWARD
-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES
-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL
-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
-A OUTPUT -j KUBE-FIREWALL
-A KUBE-FIREWALL ! -s 127.0.0.0/8 -d 127.0.0.0/8 -m comment --comment "block incoming localnet connections" -m conntrack ! --ctstate RELATED,ESTABLISHED,DNAT -j DROP
-A KUBE-FORWARD -m conntrack --ctstate INVALID -m nfacct --nfacct-name ct_state_invalid_dropped_pkts -j DROP
-A KUBE-FORWARD -m comment --comment "kubernetes forwarding rules" -m mark --mark 0x4000/0x4000 -j ACCEPT
-A KUBE-FORWARD -m comment --comment "kubernetes forwarding conntrack rule" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT
```

root@myk8s-control-plane:/# iptables -t nat -S

```
-P PREROUTING ACCEPT
-P INPUT ACCEPT
-P OUTPUT ACCEPT
-P POSTROUTING ACCEPT
-N DOCKER_OUTPUT
-N DOCKER_POSTROUTING
-N KIND-MASQ-AGENT
-N KUBE-EXT-7EJNTS7AENER2WX5
-N KUBE-KUBELET-CANARY
-N KUBE-MARK-MASQ
-N KUBE-NODEPORTS
-N KUBE-POSTROUTING
-N KUBE-PROXY-CANARY
-N KUBE-SEP-2TLZC6QOUTI37HEJ
-N KUBE-SEP-6GODNNVFRWQ66GUT
-N KUBE-SEP-DOIEFYKPESCDTYCH
-N KUBE-SEP-F2ZDTMFKATSD3GWE
-N KUBE-SEP-LDNVFCFK3DZMY5OJ
-N KUBE-SEP-OEOVYBFUDTUCKBZR
-N KUBE-SEP-P4UV4WHAETXYCYLO
-N KUBE-SEP-TBW2IYJKUCAC7GB3
-N KUBE-SEP-V2PECCYPB6X2GSCW
-N KUBE-SEP-XWEOB3JN6VI62DQQ
-N KUBE-SEP-ZEA5VGCBA2QNA7AK
-N KUBE-SERVICES
-N KUBE-SVC-7EJNTS7AENER2WX5
-N KUBE-SVC-ERIFXISQEP7F7OF4
-N KUBE-SVC-JD5MR3NA4I4DYORP
-N KUBE-SVC-KBDEBIL6IU6WL7RF
-N KUBE-SVC-NPX46M4PTMTKRN6Y
-N KUBE-SVC-TCOU7JCQXEZGVUNU
-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
-A PREROUTING -d 192.168.5.2/32 -j DOCKER_OUTPUT
-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
-A OUTPUT -d 192.168.5.2/32 -j DOCKER_OUTPUT
-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
-A POSTROUTING -d 192.168.5.2/32 -j DOCKER_POSTROUTING
-A POSTROUTING -m addrtype ! --dst-type LOCAL -m comment --comment "kind-masq-agent: ensure nat POSTROUTING directs all non-LOCAL destination traffic to our custom KIND-MASQ-AGENT chain" -j KIND-MASQ-AGENT
-A DOCKER_OUTPUT -d 192.168.5.2/32 -p tcp -m tcp --dport 53 -j DNAT --to-destination 127.0.0.11:42713
-A DOCKER_OUTPUT -d 192.168.5.2/32 -p udp -m udp --dport 53 -j DNAT --to-destination 127.0.0.11:37139
-A DOCKER_POSTROUTING -s 127.0.0.11/32 -p tcp -m tcp --sport 42713 -j SNAT --to-source 192.168.5.2:53
-A DOCKER_POSTROUTING -s 127.0.0.11/32 -p udp -m udp --sport 37139 -j SNAT --to-source 192.168.5.2:53
-A KIND-MASQ-AGENT -d 10.10.0.0/16 -m comment --comment "kind-masq-agent: local traffic is not subject to MASQUERADE" -j RETURN
-A KIND-MASQ-AGENT -m comment --comment "kind-masq-agent: outbound traffic is subject to MASQUERADE (must be last in chain)" -j MASQUERADE
-A KUBE-EXT-7EJNTS7AENER2WX5 -m comment --comment "masquerade traffic for kube-system/kube-ops-view:http external destinations" -j KUBE-MARK-MASQ
-A KUBE-EXT-7EJNTS7AENER2WX5 -j KUBE-SVC-7EJNTS7AENER2WX5
-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000
-A KUBE-NODEPORTS -d 127.0.0.0/8 -p tcp -m comment --comment "kube-system/kube-ops-view:http" -m tcp --dport 30000 -m nfacct --nfacct-name localhost_nps_accepted_pkts -j KUBE-EXT-7EJNTS7AENER2WX5
-A KUBE-NODEPORTS -p tcp -m comment --comment "kube-system/kube-ops-view:http" -m tcp --dport 30000 -j KUBE-EXT-7EJNTS7AENER2WX5
-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN
-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0
-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully
-A KUBE-SEP-2TLZC6QOUTI37HEJ -s 10.10.3.2/32 -m comment --comment "default/svc-clusterip:svc-webport" -j KUBE-MARK-MASQ
-A KUBE-SEP-2TLZC6QOUTI37HEJ -p tcp -m comment --comment "default/svc-clusterip:svc-webport" -m tcp -j DNAT --to-destination 10.10.3.2:80
-A KUBE-SEP-6GODNNVFRWQ66GUT -s 10.10.0.3/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ
-A KUBE-SEP-6GODNNVFRWQ66GUT -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.10.0.3:9153
-A KUBE-SEP-DOIEFYKPESCDTYCH -s 10.10.2.2/32 -m comment --comment "default/svc-clusterip:svc-webport" -j KUBE-MARK-MASQ
-A KUBE-SEP-DOIEFYKPESCDTYCH -p tcp -m comment --comment "default/svc-clusterip:svc-webport" -m tcp -j DNAT --to-destination 10.10.2.2:80
-A KUBE-SEP-F2ZDTMFKATSD3GWE -s 10.10.0.4/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ
-A KUBE-SEP-F2ZDTMFKATSD3GWE -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 10.10.0.4:53
-A KUBE-SEP-LDNVFCFK3DZMY5OJ -s 10.10.0.2/32 -m comment --comment "kube-system/kube-ops-view:http" -j KUBE-MARK-MASQ
-A KUBE-SEP-LDNVFCFK3DZMY5OJ -p tcp -m comment --comment "kube-system/kube-ops-view:http" -m tcp -j DNAT --to-destination 10.10.0.2:8080
-A KUBE-SEP-OEOVYBFUDTUCKBZR -s 10.10.0.4/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ
-A KUBE-SEP-OEOVYBFUDTUCKBZR -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 10.10.0.4:53
-A KUBE-SEP-P4UV4WHAETXYCYLO -s 10.10.0.4/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ
-A KUBE-SEP-P4UV4WHAETXYCYLO -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.10.0.4:9153
-A KUBE-SEP-TBW2IYJKUCAC7GB3 -s 10.10.1.2/32 -m comment --comment "default/svc-clusterip:svc-webport" -j KUBE-MARK-MASQ
-A KUBE-SEP-TBW2IYJKUCAC7GB3 -p tcp -m comment --comment "default/svc-clusterip:svc-webport" -m tcp -j DNAT --to-destination 10.10.1.2:80
-A KUBE-SEP-V2PECCYPB6X2GSCW -s 172.18.0.2/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ
-A KUBE-SEP-V2PECCYPB6X2GSCW -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 172.18.0.2:6443
-A KUBE-SEP-XWEOB3JN6VI62DQQ -s 10.10.0.3/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ
-A KUBE-SEP-XWEOB3JN6VI62DQQ -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 10.10.0.3:53
-A KUBE-SEP-ZEA5VGCBA2QNA7AK -s 10.10.0.3/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ
-A KUBE-SEP-ZEA5VGCBA2QNA7AK -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 10.10.0.3:53
-A KUBE-SERVICES -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-ERIFXISQEP7F7OF4
-A KUBE-SERVICES -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP
-A KUBE-SERVICES -d 10.200.1.196/32 -p tcp -m comment --comment "kube-system/kube-ops-view:http cluster IP" -m tcp --dport 8080 -j KUBE-SVC-7EJNTS7AENER2WX5
-A KUBE-SERVICES -d 10.200.1.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y
-A KUBE-SERVICES -d 10.200.1.133/32 -p tcp -m comment --comment "default/svc-clusterip:svc-webport cluster IP" -m tcp --dport 9000 -j KUBE-SVC-KBDEBIL6IU6WL7RF
-A KUBE-SERVICES -d 10.200.1.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-SVC-TCOU7JCQXEZGVUNU
-A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS
-A KUBE-SVC-7EJNTS7AENER2WX5 ! -s 10.10.0.0/16 -d 10.200.1.196/32 -p tcp -m comment --comment "kube-system/kube-ops-view:http cluster IP" -m tcp --dport 8080 -j KUBE-MARK-MASQ
-A KUBE-SVC-7EJNTS7AENER2WX5 -m comment --comment "kube-system/kube-ops-view:http -> 10.10.0.2:8080" -j KUBE-SEP-LDNVFCFK3DZMY5OJ
-A KUBE-SVC-ERIFXISQEP7F7OF4 ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-MARK-MASQ
-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.10.0.3:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-ZEA5VGCBA2QNA7AK
-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.10.0.4:53" -j KUBE-SEP-F2ZDTMFKATSD3GWE
-A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ
-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.10.0.3:9153" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-6GODNNVFRWQ66GUT
-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.10.0.4:9153" -j KUBE-SEP-P4UV4WHAETXYCYLO
-A KUBE-SVC-KBDEBIL6IU6WL7RF ! -s 10.10.0.0/16 -d 10.200.1.133/32 -p tcp -m comment --comment "default/svc-clusterip:svc-webport cluster IP" -m tcp --dport 9000 -j KUBE-MARK-MASQ
-A KUBE-SVC-KBDEBIL6IU6WL7RF -m comment --comment "default/svc-clusterip:svc-webport -> 10.10.1.2:80" -m statistic --mode random --probability 0.33333333349 -j KUBE-SEP-TBW2IYJKUCAC7GB3
-A KUBE-SVC-KBDEBIL6IU6WL7RF -m comment --comment "default/svc-clusterip:svc-webport -> 10.10.2.2:80" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-DOIEFYKPESCDTYCH
-A KUBE-SVC-KBDEBIL6IU6WL7RF -m comment --comment "default/svc-clusterip:svc-webport -> 10.10.3.2:80" -j KUBE-SEP-2TLZC6QOUTI37HEJ
-A KUBE-SVC-NPX46M4PTMTKRN6Y ! -s 10.10.0.0/16 -d 10.200.1.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ
-A KUBE-SVC-NPX46M4PTMTKRN6Y -m comment --comment "default/kubernetes:https -> 172.18.0.2:6443" -j KUBE-SEP-V2PECCYPB6X2GSCW
-A KUBE-SVC-TCOU7JCQXEZGVUNU ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-MARK-MASQ
-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 10.10.0.3:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-XWEOB3JN6VI62DQQ
-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 10.10.0.4:53" -j KUBE-SEP-OEOVYBFUDTUCKBZR
```

root@myk8s-control-plane:/# iptables -t mangle -S

```
-P PREROUTING ACCEPT
-P INPUT ACCEPT
-P FORWARD ACCEPT
-P OUTPUT ACCEPT
-P POSTROUTING ACCEPT
-N KUBE-IPTABLES-HINT
-N KUBE-KUBELET-CANARY
-N KUBE-PROXY-CANARY
```

5. sessionAffinity: ClientIP

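- With sessionAffinity: ClientIP, requests from the same client IP keep going to the Pod that client reached first.

- How long the affinity is kept can be set separately (sessionAffinityConfig.clientIP.timeoutSeconds).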
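Affinity can also be switched on for an existing Service with `kubectl patch`. The sketch below only builds and validates the patch body; `affinity_patch` is a hypothetical helper, and the 1 to 86400 second bound reflects the limit the API server documents for timeoutSeconds (default 10800, i.e. 3 hours).

```shell
#!/bin/sh
# Hypothetical helper: print a patch body that enables ClientIP session
# affinity with a timeout of $1 seconds (default 10800 = 3 hours).
affinity_patch() {
  t=${1:-10800}
  case $t in
    ''|*[!0-9]*) echo "timeout must be a number" >&2; return 1 ;;
  esac
  if [ "$t" -lt 1 ] || [ "$t" -gt 86400 ]; then
    echo "timeout must be between 1 and 86400" >&2
    return 1
  fi
  printf '{"spec":{"sessionAffinity":"ClientIP","sessionAffinityConfig":{"clientIP":{"timeoutSeconds":%s}}}}\n' "$t"
}

affinity_patch 3600
```

Usage against the Service below would look like `kubectl patch svc my-service -p "$(affinity_patch 3600)"`; kubectl accepts the JSON body as a strategic merge patch by default.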

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
  namespace: default
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  sessionAffinity: ClientIP
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800 # default value (3 hours)
```

6. NodePort

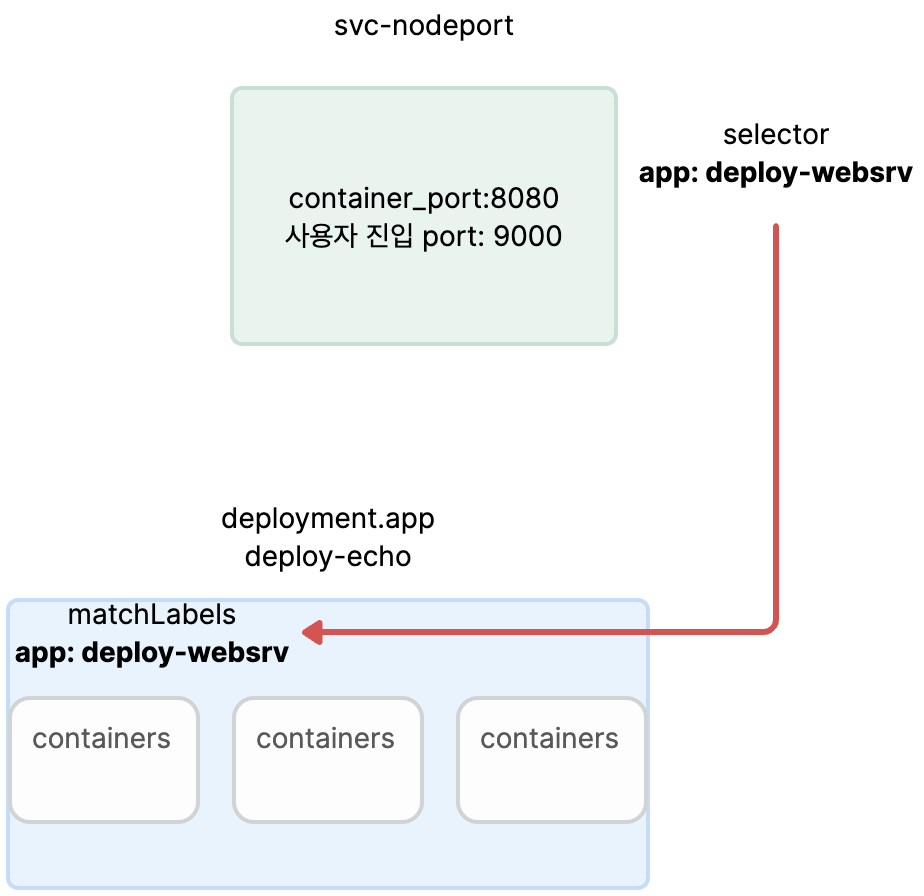

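- With NodePort, the first entry point is a node's IP:nodePort (typically a worker server, although any node works).

- After entering through node:port, the traffic follows the same path as ClusterIP traffic, driven by the iptables rules kube-proxy created (KUBE-NODEPORTS → KUBE-EXT → KUBE-SVC → KUBE-SEP).

- Sample Deployment and Service file from Gasida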

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-echo
spec:
  replicas: 3
  selector:
    matchLabels:
      app: deploy-websrv
  template:
    metadata:
      labels:
        app: deploy-websrv
    spec:
      terminationGracePeriodSeconds: 0
      containers:
        - name: kans-websrv
          image: mendhak/http-https-echo
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: svc-nodeport
spec:
  ports:
    - name: svc-webport
      port: 9000
      targetPort: 8080
  selector:
    app: deploy-websrv
  type: NodePort
```

- Create the Deployment

**kubectl apply -f echo-deploy.yaml,svc-nodeport.yaml**

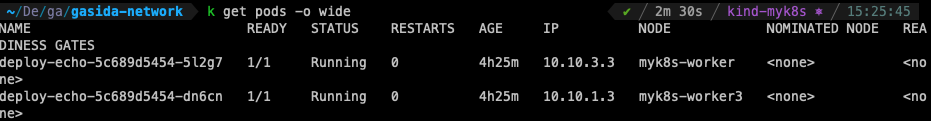

- Check the svc-nodeport Service information

kubectl get svc svc-nodeport

User -> nodePort: 30716 -> Service port: 9000 -> Pod port: 8080

- Check the endpoints

kubectl get endpoints svc-nodeport

- Check load-balanced access through the NodePort Service

```
NPORT=$(kubectl get service svc-nodeport -o jsonpath='{.spec.ports[0].nodePort}')
# NPORT is 30716 in this run
CNODE=172.18.0.2
NODE1=172.18.0.3
NODE2=172.18.0.5
NODE3=172.18.0.4
docker exec -it mypc curl -s $CNODE:$NPORT | jq   # Why does headers.host show this address?
for i in $CNODE $NODE1 $NODE2 $NODE3 ; do echo ">> node $i <<"; docker exec -it mypc curl -s $i:$NPORT; echo; done
```

```
>> node 172.18.0.2 <<
{
  "path": "/",
  "headers": {
    "host": "172.18.0.2:30716",
    "user-agent": "curl/8.7.1",
    "accept": "*/*"
  },
  "method": "GET",
  "body": "",
  "fresh": false,
  "hostname": "172.18.0.2",
  "ip": "::ffff:172.18.0.2",
  "ips": [],
  "protocol": "http",
  "query": {},
  "subdomains": [],
  "xhr": false,
  "os": {
    "hostname": "deploy-echo-5c689d5454-5l2g7"
  },
  "connection": {}
}
>> node 172.18.0.3 <<
{
  "path": "/",
  "headers": {
    "host": "172.18.0.3:30716",
    "user-agent": "curl/8.7.1",
    "accept": "*/*"
  },
  "method": "GET",
  "body": "",
  "fresh": false,
  "hostname": "172.18.0.3",
  "ip": "::ffff:10.10.3.1",
  "ips": [],
  "protocol": "http",
  "query": {},
  "subdomains": [],
  "xhr": false,
  "os": {
    "hostname": "deploy-echo-5c689d5454-5l2g7"
  },
  "connection": {}
}
>> node 172.18.0.5 <<
{
  "path": "/",
  "headers": {
    "host": "172.18.0.5:30716",
    "user-agent": "curl/8.7.1",
    "accept": "*/*"
  },
  "method": "GET",
  "body": "",
  "fresh": false,
  "hostname": "172.18.0.5",
  "ip": "::ffff:10.10.2.1",
  "ips": [],
  "protocol": "http",
  "query": {},
  "subdomains": [],
  "xhr": false,
  "os": {
    "hostname": "deploy-echo-5c689d5454-s27kn"
  },
  "connection": {}
}
>> node 172.18.0.4 <<
{
  "path": "/",
  "headers": {
    "host": "172.18.0.4:30716",
    "user-agent": "curl/8.7.1",
    "accept": "*/*"
  },
  "method": "GET",
  "body": "",
  "fresh": false,
  "hostname": "172.18.0.4",
  "ip": "::ffff:172.18.0.4",
  "ips": [],
  "protocol": "http",
  "query": {},
  "subdomains": [],
  "xhr": false,
  "os": {
    "hostname": "deploy-echo-5c689d5454-5l2g7"
  },
  "connection": {}
}
```

headers.host shows NODE_IP:NodePort because curl sets the Host header from the URL it was given. iptables DNAT rewrites only the packet's destination IP and port (to PodIP:8080), not the HTTP headers, so the Pod still sees the node address the client originally targeted.

The control-plane node has no target Pod, yet it still accepts the connection. Why?

```
docker exec -it mypc zsh -c "for i in {1..100}; do curl -s $CNODE:$NPORT | grep hostname; done | sort | uniq -c | sort -nr"
docker exec -it mypc zsh -c "for i in {1..100}; do curl -s $NODE1:$NPORT | grep hostname; done | sort | uniq -c | sort -nr"
docker exec -it mypc zsh -c "for i in {1..100}; do curl -s $NODE2:$NPORT | grep hostname; done | sort | uniq -c | sort -nr"
docker exec -it mypc zsh -c "for i in {1..100}; do curl -s $NODE3:$NPORT | grep hostname; done | sort | uniq -c | sort -nr"
```

```
100 "hostname": "172.18.0.2",
 42 "hostname": "deploy-echo-5c689d5454-dn6cn"
 34 "hostname": "deploy-echo-5c689d5454-s27kn"
 24 "hostname": "deploy-echo-5c689d5454-5l2g7"

100 "hostname": "172.18.0.3",
 35 "hostname": "deploy-echo-5c689d5454-s27kn"
 34 "hostname": "deploy-echo-5c689d5454-dn6cn"
 31 "hostname": "deploy-echo-5c689d5454-5l2g7"

100 "hostname": "172.18.0.5",
 42 "hostname": "deploy-echo-5c689d5454-s27kn"
 37 "hostname": "deploy-echo-5c689d5454-dn6cn"
 21 "hostname": "deploy-echo-5c689d5454-5l2g7"

100 "hostname": "172.18.0.4",
 35 "hostname": "deploy-echo-5c689d5454-s27kn"
 34 "hostname": "deploy-echo-5c689d5454-dn6cn"
 31 "hostname": "deploy-echo-5c689d5454-5l2g7"
```

The reason is that kube-proxy runs on every node in the cluster. When the Service is created, it installs the NodePort rules on every node, so a request arriving at any node's nodePort, including the control plane's, is DNATed to one of the backend Pods, even one running on another node.
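To judge how evenly the requests were spread, the `uniq -c` counts can be turned into percentages. A minimal awk sketch; `shares` is a hypothetical helper, and the sample input is the control-plane (172.18.0.2) run above with the names shortened.

```shell
#!/bin/sh
# Turn `uniq -c` style lines ("COUNT NAME...") into percentages of the total.
shares() {
  awk '{ count[NR] = $1; $1 = ""; name[NR] = substr($0, 2); total += count[NR] }
       END { for (i = 1; i <= NR; i++) printf "%5.1f%% %s\n", 100 * count[i] / total, name[i] }'
}

# Counts from the control-plane (172.18.0.2) run above.
shares <<'EOF'
42 deploy-echo-5c689d5454-dn6cn
34 deploy-echo-5c689d5454-s27kn
24 deploy-echo-5c689d5454-5l2g7
EOF
```

Appending `| grep deploy-echo | shares` to one of the loops above (inside the zsh -c string) would report each Pod's share directly; with 100 random picks among three Pods, values between roughly 20% and 45% are normal noise.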

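Can myk8s-control-plane reach CLUSTER-IP:PORT? ⇒ Yes

Can mypc reach CLUSTER-IP:PORT? ⇒ No

⇒ The control-plane node is part of the Kubernetes cluster, so kube-proxy has installed the Service rules there, and a ClusterIP is reachable only from inside the k8s cluster. The mypc Docker container does not belong to the cluster, so it has neither those rules nor a route to the ClusterIP, and the connection fails.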

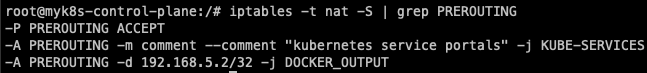

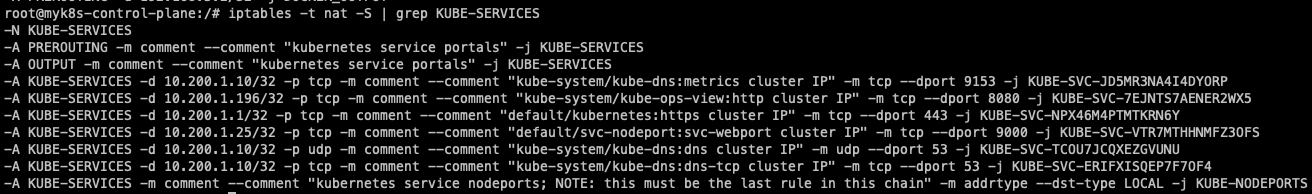

6.1 Checking the iptables rules

iptables -t nat -S | grep PREROUTING

iptables -t nat -S | grep KUBE-SERVICES

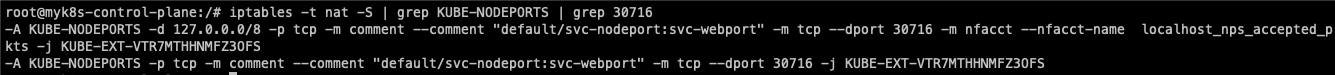

iptables -t nat -S | grep KUBE-NODEPORTS | grep 30716

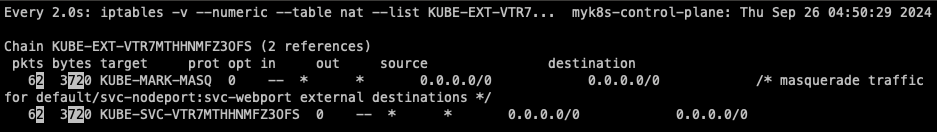

watch -d 'iptables -v --numeric --table nat --list KUBE-EXT-VTR7MTHHNMFZ3OFS'

iptables -t nat -S | grep "A KUBE-EXT-VTR7MTHHNMFZ3OFS"

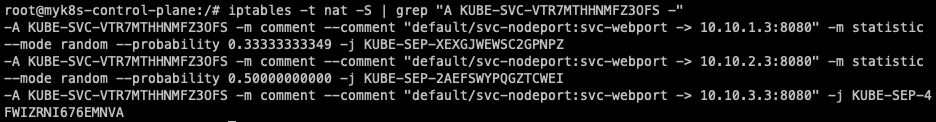

iptables -t nat -S | grep "A KUBE-SVC-VTR7MTHHNMFZ3OFS -"

```
iptables -t nat -S | grep KUBE-SEP-XEXGJWEWSC2GPNPZ
-N KUBE-SEP-XEXGJWEWSC2GPNPZ
-A KUBE-SEP-XEXGJWEWSC2GPNPZ -s 10.10.1.3/32 -m comment --comment "default/svc-nodeport:svc-webport" -j KUBE-MARK-MASQ
-A KUBE-SEP-XEXGJWEWSC2GPNPZ -p tcp -m comment --comment "default/svc-nodeport:svc-webport" -m tcp -j DNAT --to-destination 10.10.1.3:8080
-A KUBE-SVC-VTR7MTHHNMFZ3OFS -m comment --comment "default/svc-nodeport:svc-webport -> 10.10.1.3:8080" -m statistic --mode random --probability 0.33333333349 -j KUBE-SEP-XEXGJWEWSC2GPNPZ

iptables -t nat -S | grep KUBE-SEP-2AEFSWYPQGZTCWEI
-N KUBE-SEP-2AEFSWYPQGZTCWEI
-A KUBE-SEP-2AEFSWYPQGZTCWEI -s 10.10.2.3/32 -m comment --comment "default/svc-nodeport:svc-webport" -j KUBE-MARK-MASQ
-A KUBE-SEP-2AEFSWYPQGZTCWEI -p tcp -m comment --comment "default/svc-nodeport:svc-webport" -m tcp -j DNAT --to-destination 10.10.2.3:8080
-A KUBE-SVC-VTR7MTHHNMFZ3OFS -m comment --comment "default/svc-nodeport:svc-webport -> 10.10.2.3:8080" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-2AEFSWYPQGZTCWEI

iptables -t nat -S | grep KUBE-SEP-4FWIZRNI676EMNVA
-N KUBE-SEP-4FWIZRNI676EMNVA
-A KUBE-SEP-4FWIZRNI676EMNVA -s 10.10.3.3/32 -m comment --comment "default/svc-nodeport:svc-webport" -j KUBE-MARK-MASQ
-A KUBE-SEP-4FWIZRNI676EMNVA -p tcp -m comment --comment "default/svc-nodeport:svc-webport" -m tcp -j DNAT --to-destination 10.10.3.3:8080
-A KUBE-SVC-VTR7MTHHNMFZ3OFS -m comment --comment "default/svc-nodeport:svc-webport -> 10.10.3.3:8080" -j KUBE-SEP-4FWIZRNI676EMNVA
```

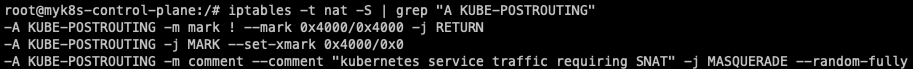

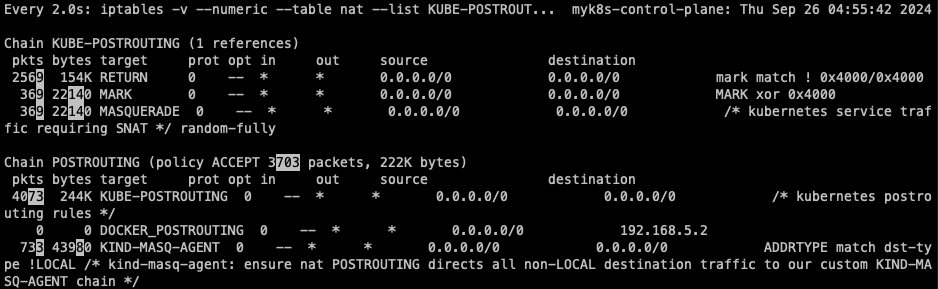

iptables -t nat -S | grep "A KUBE-POSTROUTING"

watch -d 'iptables -v --numeric --table nat --list KUBE-POSTROUTING;echo;iptables -v --numeric --table nat --list POSTROUTING'

7. externalTrafficPolicy

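- externalTrafficPolicy

- Cluster (the default): every node accepts external traffic on the nodePort and may forward it to a Pod on any node. It suits cases where clients do not need a fixed node IP and the Pods may run on any node. The traffic is SNATed on the way, so the Pod does not see the real client address.

- Local: a node forwards external traffic only to Pods running on that same node, so nodePort access works only through nodes that host a Pod. Clients therefore have to use the fixed IPs of those nodes; in return the client source IP is preserved and there is no extra hop to another node.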
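For traffic coming from outside the cluster, the difference between the two policies can be reduced to a toy rule, sketched below. It is a simplification, not kube-proxy's logic: it ignores traffic from Pods inside the cluster, which (as the KUBE-EXT rules in 7.1 show) is still sent to the whole Service. The function name is hypothetical, and the Pod placement (myk8s-worker at 172.18.0.3 and myk8s-worker3 at 172.18.0.4) is taken from the Local test later in this section.

```shell
#!/bin/sh
# Toy model of which nodes answer an external client on a NodePort.
# $1 = policy (Cluster or Local), $2 = node being tested,
# remaining args = nodes that host at least one ready backend Pod.
node_answers() {
  policy=$1 node=$2
  shift 2
  [ "$policy" = "Cluster" ] && return 0   # every node forwards, possibly to another node
  for n in "$@"; do
    [ "$n" = "$node" ] && return 0        # Local: only nodes with a local Pod answer
  done
  return 1
}

for node in 172.18.0.2 172.18.0.3 172.18.0.5 172.18.0.4; do
  if node_answers Local "$node" 172.18.0.3 172.18.0.4; then
    echo "$node answers"
  else
    echo "$node times out"
  fi
done
```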

Local mode

```yaml
apiVersion: v1
kind: Service
metadata:
  name: local-service
spec:
  type: LoadBalancer
  externalTrafficPolicy: Local
  ports:
    - port: 80
      targetPort: 8080
  selector:
    app: test-app
```

Cluster mode

```yaml
apiVersion: v1
kind: Service
metadata:
  name: cluster-service
spec:
  type: LoadBalancer
  externalTrafficPolicy: Cluster
  ports:
    - port: 80
      targetPort: 8080
  selector:
    app: test-app
```

docker exec -it mypc curl -s --connect-timeout 1 $CNODE:$NPORT | jq

for i in $CNODE $NODE1 $NODE2 $NODE3 ; do echo ">> node $i <<"; docker exec -it mypc curl -s --connect-timeout 1 $i:$NPORT; echo; done

```
>> node 172.18.0.2 <<

>> node 172.18.0.3 <<
{
  "path": "/",
  "headers": {
    "host": "172.18.0.3:32040",
    "user-agent": "curl/8.7.1",
    "accept": "*/*"
  },
  "method": "GET",
  "body": "",
  "fresh": false,
  "hostname": "172.18.0.3",
  "ip": "::ffff:172.18.0.100",
  "ips": [],
  "protocol": "http",
  "query": {},
  "subdomains": [],
  "xhr": false,
  "os": {
    "hostname": "deploy-echo-5c689d5454-5l2g7"
  },
  "connection": {}
}
>> node 172.18.0.5 <<

>> node 172.18.0.4 <<
{
  "path": "/",
  "headers": {
    "host": "172.18.0.4:32040",
    "user-agent": "curl/8.7.1",
    "accept": "*/*"
  },
  "method": "GET",
  "body": "",
  "fresh": false,
  "hostname": "172.18.0.4",
  "ip": "::ffff:172.18.0.100",
  "ips": [],
  "protocol": "http",
  "query": {},
  "subdomains": [],
  "xhr": false,
  "os": {
    "hostname": "deploy-echo-5c689d5454-dn6cn"
  },
  "connection": {}
}
```

- Only the nodes that host a Pod (172.18.0.3 and 172.18.0.4) answer; 172.18.0.2 and 172.18.0.5 time out. The Pod also sees the real client address (172.18.0.100) instead of a node address, because the source is no longer SNATed.

docker exec -it mypc zsh -c "while true; do curl -s --connect-timeout 1 $NODE3:$NPORT | grep hostname; date '+%Y-%m-%d %H:%M:%S' ; echo ; sleep 1; done"

"hostname": "172.18.0.4",

"hostname": "deploy-echo-5c689d5454-dn6cn"

2024-09-26 06:19:42

"hostname": "172.18.0.4",

"hostname": "deploy-echo-5c689d5454-dn6cn"

2024-09-26 06:19:43

"hostname": "172.18.0.4",

"hostname": "deploy-echo-5c689d5454-dn6cn"

2024-09-26 06:19:44

"hostname": "172.18.0.4",

"hostname": "deploy-echo-5c689d5454-dn6cn"

2024-09-26 06:19:45

"hostname": "172.18.0.4",

"hostname": "deploy-echo-5c689d5454-dn6cn"

2024-09-26 06:19:46

"hostname": "172.18.0.4",

"hostname": "deploy-echo-5c689d5454-dn6cn"

2024-09-26 06:19:47

"hostname": "172.18.0.4",

"hostname": "deploy-echo-5c689d5454-dn6cn"

2024-09-26 06:19:48

"hostname": "172.18.0.4",

"hostname": "deploy-echo-5c689d5454-dn6cn"

2024-09-26 06:19:49

"hostname": "172.18.0.4",

"hostname": "deploy-echo-5c689d5454-dn6cn"

2024-09-26 06:19:50

"hostname": "172.18.0.4",

"hostname": "deploy-echo-5c689d5454-dn6cn"

2024-09-26 06:19:51

"hostname": "172.18.0.4",

"hostname": "deploy-echo-5c689d5454-dn6cn"

2024-09-26 06:19:52

"hostname": "172.18.0.4",

"hostname": "deploy-echo-5c689d5454-dn6cn"

2024-09-26 06:19:53

"hostname": "172.18.0.4",

"hostname": "deploy-echo-5c689d5454-dn6cn"

2024-09-26 06:19:54

"hostname": "172.18.0.4",

"hostname": "deploy-echo-5c689d5454-dn6cn"

2024-09-26 06:19:55

7.1 Iptables

The lab example checks worker2, but in my run the pods were scheduled on worker1 and worker3, so I checked on worker3.
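The NodePort rules dumped in this section chain as KUBE-NODEPORTS -> KUBE-EXT-VTR7MTHHNMFZ3OFS -> either KUBE-SVC-VTR7MTHHNMFZ3OFS (all endpoints) or KUBE-SVL-VTR7MTHHNMFZ3OFS (endpoints on this node only). The Python sketch below approximates that order under stated assumptions: `src_is_local` stands in for `-m addrtype --src-type LOCAL`, masquerading (KUBE-MARK-MASQ) is left out, and all function names are hypothetical. It also reproduces the `-m statistic --mode random --probability` pattern used to pick one of n endpoints uniformly: rule k matches with probability 1/(n-k) and the last rule is unconditional.

```python
import ipaddress
import random
from typing import List, Optional

# Cluster CIDR from the "-s 10.10.0.0/16" match in the KUBE-EXT rules.
CLUSTER_CIDR = ipaddress.ip_network("10.10.0.0/16")


def sep_probabilities(n: int) -> List[Optional[float]]:
    """Per-rule match probabilities for choosing 1 of n endpoints uniformly.

    Rule k (0-based) matches with probability 1/(n-k); the last rule has no
    statistic match (None) and takes whatever is left. For n=2 this gives
    [0.5, None], like the two KUBE-SEP jumps in KUBE-SVC-VTR7MTHHNMFZ3OFS.
    """
    if n == 0:
        return []
    return [1.0 / (n - k) for k in range(n - 1)] + [None]


def pick_endpoint(endpoints: List[str], rng: random.Random) -> Optional[str]:
    """Walk the KUBE-SEP jumps top to bottom, as iptables evaluates them."""
    for endpoint, p in zip(endpoints, sep_probabilities(len(endpoints))):
        if p is None or rng.random() < p:
            return endpoint
    return None  # empty chain: nothing to DNAT to


def ext_chain(src_ip: str, src_is_local: bool, all_eps: List[str],
              local_eps: List[str], rng: random.Random) -> Optional[str]:
    """Rough model of KUBE-EXT-...: choose KUBE-SVC (all) or KUBE-SVL (local)."""
    if ipaddress.ip_address(src_ip) in CLUSTER_CIDR:
        return pick_endpoint(all_eps, rng)  # "pod traffic ..." -> KUBE-SVC
    if src_is_local:
        return pick_endpoint(all_eps, rng)  # "route LOCAL traffic ..." -> KUBE-SVC
    return pick_endpoint(local_eps, rng)    # final jump -> KUBE-SVL (this node only)


if __name__ == "__main__":
    rng = random.Random(42)
    eps = ["10.10.1.3:8080", "10.10.3.3:8080"]  # endpoints in KUBE-SVC-VTR7MTHHNMFZ3OFS
    print(sep_probabilities(len(eps)))  # [0.5, None]
    # External client hitting worker3, whose KUBE-SVL only lists 10.10.1.3:8080.
    print(ext_chain("172.18.0.100", False, eps, ["10.10.1.3:8080"], rng))
    # Same client hitting the control plane, which has no local endpoint.
    print(ext_chain("172.18.0.100", False, eps, [], rng))
```

In this model the control-plane case returns None, which is consistent with 172.18.0.2 giving no reply; 172.18.0.100 is simply the client address reported in the echo output.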

iptables -t nat -S | grep 32040

-A KUBE-NODEPORTS -d 127.0.0.0/8 -p tcp -m comment --comment "default/svc-nodeport:svc-webport" -m tcp --dport 32040 -m nfacct --nfacct-name localhost_nps_accepted_pkts -j KUBE-EXT-VTR7MTHHNMFZ3OFS

-A KUBE-NODEPORTS -p tcp -m comment --comment "default/svc-nodeport:svc-webport" -m tcp --dport 32040 -j KUBE-EXT-VTR7MTHHNMFZ3OFS

iptables -t nat -S | grep 'A KUBE-EXT-VTR7MTHHNMFZ3OFS'


-A KUBE-EXT-VTR7MTHHNMFZ3OFS -s 10.10.0.0/16 -m comment --comment "pod traffic for default/svc-nodeport:svc-webport external destinations" -j KUBE-SVC-VTR7MTHHNMFZ3OFS

-A KUBE-EXT-VTR7MTHHNMFZ3OFS -m comment --comment "masquerade LOCAL traffic for default/svc-nodeport:svc-webport external destinations" -m addrtype --src-type LOCAL -j KUBE-MARK-MASQ

-A KUBE-EXT-VTR7MTHHNMFZ3OFS -m comment --comment "route LOCAL traffic for default/svc-nodeport:svc-webport external destinations" -m addrtype --src-type LOCAL -j KUBE-SVC-VTR7MTHHNMFZ3OFS

-A KUBE-EXT-VTR7MTHHNMFZ3OFS -j KUBE-SVL-VTR7MTHHNMFZ3OFS

iptables -t nat -S | grep 'A KUBE-SVL-VTR7MTHHNMFZ3OFS'

-A KUBE-SVL-VTR7MTHHNMFZ3OFS -m comment --comment "default/svc-nodeport:svc-webport -> 10.10.1.3:8080" -j KUBE-SEP-XEXGJWEWSC2GPNPZ

myk8s-control-plane

iptables -t nat -S | grep 32040

-A KUBE-NODEPORTS -d 127.0.0.0/8 -p tcp -m comment --comment "default/svc-nodeport:svc-webport" -m tcp --dport 32040 -m nfacct --nfacct-name localhost_nps_accepted_pkts -j KUBE-EXT-VTR7MTHHNMFZ3OFS

-A KUBE-NODEPORTS -p tcp -m comment --comment "default/svc-nodeport:svc-webport" -m tcp --dport 32040 -j KUBE-EXT-VTR7MTHHNMFZ3OFS

iptables -t nat -S | grep 'A KUBE-EXT-VTR7MTHHNMFZ3OFS'

-A KUBE-EXT-VTR7MTHHNMFZ3OFS -s 10.10.0.0/16 -m comment --comment "pod traffic for default/svc-nodeport:svc-webport external destinations" -j KUBE-SVC-VTR7MTHHNMFZ3OFS

-A KUBE-EXT-VTR7MTHHNMFZ3OFS -m comment --comment "masquerade LOCAL traffic for default/svc-nodeport:svc-webport external destinations" -m addrtype --src-type LOCAL -j KUBE-MARK-MASQ

-A KUBE-EXT-VTR7MTHHNMFZ3OFS -m comment --comment "route LOCAL traffic for default/svc-nodeport:svc-webport external destinations" -m addrtype --src-type LOCAL -j KUBE-SVC-VTR7MTHHNMFZ3OFS

iptables -t nat -S | grep 'A KUBE-SVC-VTR7MTHHNMFZ3OFS'

-A KUBE-SVC-VTR7MTHHNMFZ3OFS ! -s 10.10.0.0/16 -d 10.200.1.29/32 -p tcp -m comment --comment "default/svc-nodeport:svc-webport cluster IP" -m tcp --dport 9000 -j KUBE-MARK-MASQ

-A KUBE-SVC-VTR7MTHHNMFZ3OFS -m comment --comment "default/svc-nodeport:svc-webport -> 10.10.1.3:8080" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-XEXGJWEWSC2GPNPZ

-A KUBE-SVC-VTR7MTHHNMFZ3OFS -m comment --comment "default/svc-nodeport:svc-webport -> 10.10.3.3:8080" -j KUBE-SEP-4FWIZRNI676EMNVA

8. EndpointSlice

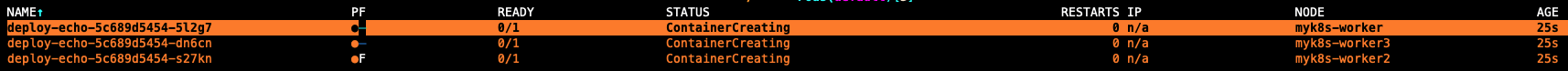

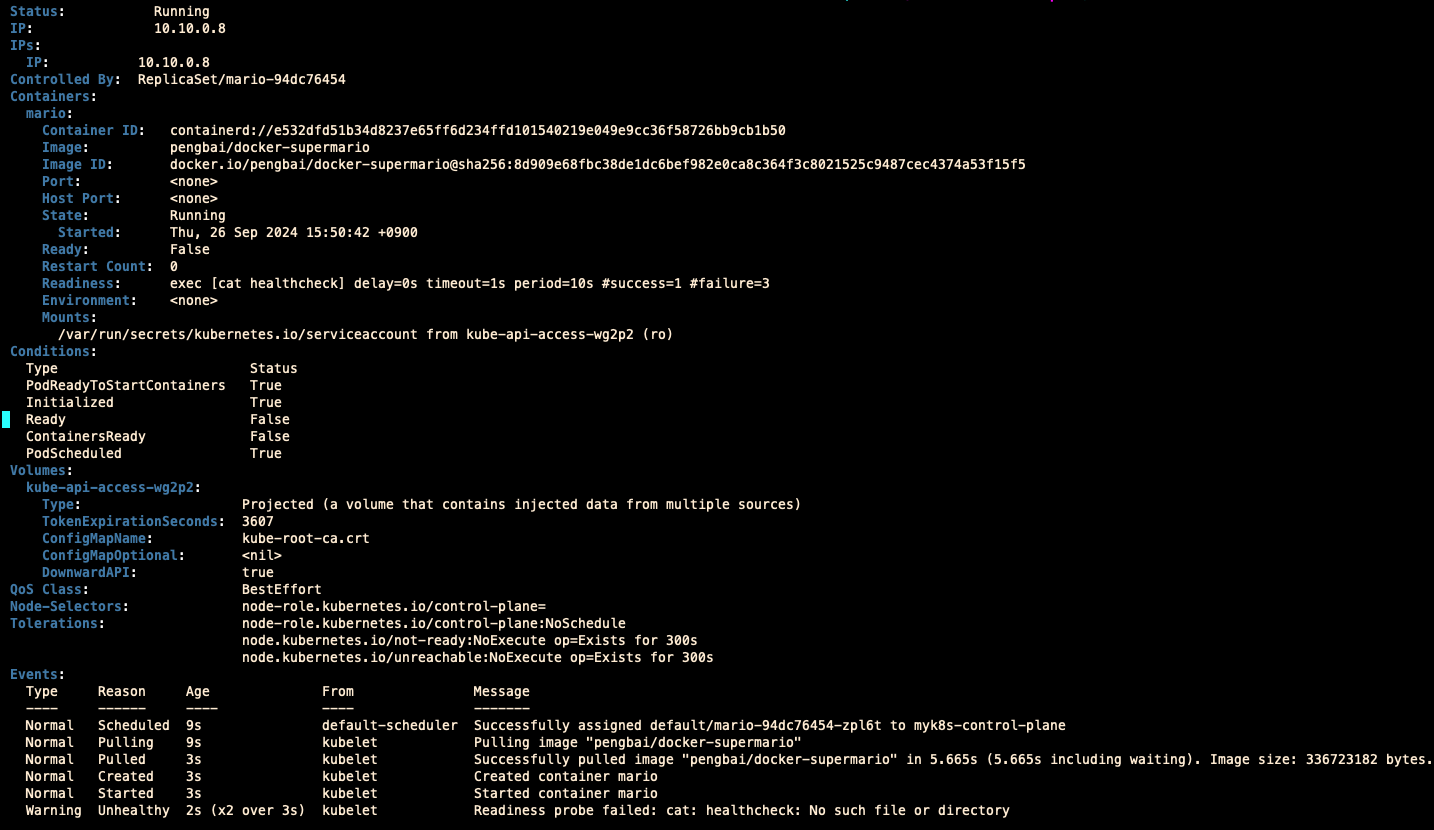

The healthcheck file does not exist, so the pod reports "Readiness probe failed".

Difference between the Endpoints and EndpointSlice output

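⇒ An EndpointSlice does not appear to be a single fixed object per Service (note the generated name mario-5hq49), and each endpoint carries its own state: Conditions (Ready: false for the mario pod), TargetRef, and NodeName. Endpoints only sorts the same address into NotReadyAddresses.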
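A minimal Python sketch of the mapping between the two outputs below, assuming the documented EndpointSlice behavior: a Service's endpoints are split across slices (the EndpointSlice controller caps a slice at 100 endpoints by default), each endpoint keeps its own conditions, and the single Endpoints view only separates ready from not-ready addresses. The dataclasses and the `max_per_slice` parameter are hypothetical stand-ins, not the Kubernetes API types.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Endpoint:
    address: str
    ready: bool           # EndpointSlice "Conditions: Ready"
    target_ref: str = ""  # e.g. "Pod/mario-94dc76454-wqnfb"


@dataclass
class EndpointSlice:
    name: str
    endpoints: List[Endpoint] = field(default_factory=list)


def build_slices(service: str, endpoints: List[Endpoint],
                 max_per_slice: int = 100) -> List[EndpointSlice]:
    """Split a Service's endpoints into slices of at most max_per_slice entries."""
    slices = []
    for i in range(0, len(endpoints), max_per_slice):
        # Real slice names get a random suffix (e.g. mario-5hq49); use an index here.
        slices.append(EndpointSlice(f"{service}-{i // max_per_slice}",
                                    endpoints[i:i + max_per_slice]))
    return slices


def to_endpoints_subset(slices: List[EndpointSlice]) -> Dict[str, List[str]]:
    """Flatten slices into the single Endpoints view (Addresses / NotReadyAddresses)."""
    subset: Dict[str, List[str]] = {"Addresses": [], "NotReadyAddresses": []}
    for s in slices:
        for ep in s.endpoints:
            key = "Addresses" if ep.ready else "NotReadyAddresses"
            subset[key].append(ep.address)
    return subset


if __name__ == "__main__":
    mario = [Endpoint("10.10.0.7", ready=False, target_ref="Pod/mario-94dc76454-wqnfb")]
    print(to_endpoints_subset(build_slices("mario", mario)))
    # {'Addresses': [], 'NotReadyAddresses': ['10.10.0.7']}
```

The per-endpoint record is what lets a slice say why an address is excluded (Ready: false, which pod, which node), information that the flattened Endpoints view drops.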

kubectl describe ep

Name: kubernetes

Namespace: default

Labels: endpointslice.kubernetes.io/skip-mirror=true

Annotations: <none>

Subsets:

Addresses: 172.18.0.2

NotReadyAddresses: <none>

Ports:

Name Port Protocol

---- ---- --------

https 6443 TCP

Events: <none>

Name: mario

Namespace: default

Labels: <none>

Annotations: endpoints.kubernetes.io/last-change-trigger-time: 2024-09-26T06:36:41Z

Subsets:

Addresses: <none>

NotReadyAddresses: 10.10.0.7

Ports:

Name Port Protocol

---- ---- --------

mario-webport 8080 TCP

Events: <none>

kubectl describe endpointslice

Name: kubernetes

Namespace: default

Labels: kubernetes.io/service-name=kubernetes

Annotations: <none>

AddressType: IPv4

Ports:

Name Port Protocol

---- ---- --------

https 6443 TCP

Endpoints:

- Addresses: 172.18.0.2

Conditions:

Ready: true

Hostname: <unset>

NodeName: <unset>

Zone: <unset>

Events: <none>

Name: mario-5hq49

Namespace: default

Labels: endpointslice.kubernetes.io/managed-by=endpointslice-controller.k8s.io

kubernetes.io/service-name=mario

Annotations: endpoints.kubernetes.io/last-change-trigger-time: 2024-09-26T06:36:41Z

AddressType: IPv4

Ports:

Name Port Protocol

---- ---- --------

mario-webport 8080 TCP

Endpoints:

- Addresses: 10.10.0.7

Conditions:

Ready: false

Hostname: <unset>

TargetRef: Pod/mario-94dc76454-wqnfb

NodeName: myk8s-control-plane

Zone: <unset>

Events: <none>

TCP on port 5201, 5-second test (-t 5)

kubectl exec -it deploy/iperf3-client -- iperf3 -c iperf3-server -t 5

Connecting to host iperf3-server, port 5201

[ 5] local 10.10.1.4 port 58472 connected to 10.200.1.162 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 6.68 GBytes 57.4 Gbits/sec 1153 919 KBytes

[ 5] 1.00-2.00 sec 5.89 GBytes 50.6 Gbits/sec 665 998 KBytes

[ 5] 2.00-3.00 sec 7.11 GBytes 61.0 Gbits/sec 361 1001 KBytes

[ 5] 3.00-4.00 sec 6.99 GBytes 60.0 Gbits/sec 700 1.01 MBytes

[ 5] 4.00-5.00 sec 7.16 GBytes 61.5 Gbits/sec 1925 1.06 MBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-5.00 sec 33.8 GBytes 58.1 Gbits/sec 4804 sender

[ 5] 0.00-5.00 sec 33.8 GBytes 58.1 Gbits/sec receiver

iperf Done.

kubectl logs -l app=iperf3-server -f

[ ID] Interval Transfer Bitrate

[ 5] 0.00-1.00 sec 6.68 GBytes 57.4 Gbits/sec

[ 5] 1.00-2.00 sec 5.89 GBytes 50.6 Gbits/sec

[ 5] 2.00-3.00 sec 7.10 GBytes 61.0 Gbits/sec

[ 5] 3.00-4.00 sec 6.99 GBytes 60.0 Gbits/sec

[ 5] 4.00-5.00 sec 7.16 GBytes 61.5 Gbits/sec

[ 5] 5.00-5.00 sec 512 KBytes 42.8 Gbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate

[ 5] 0.00-5.00 sec 33.8 GBytes 58.1 Gbits/sec receiver

UDP (-u) with a 20 Gbit/s target bitrate (-b 20G)

kubectl exec -it deploy/iperf3-client -- iperf3 -c iperf3-server -u -b 20G

Connecting to host iperf3-server, port 5201

[ 5] local 10.10.1.4 port 46844 connected to 10.200.1.162 port 5201

[ ID] Interval Transfer Bitrate Total Datagrams

[ 5] 0.00-1.00 sec 262 MBytes 2.20 Gbits/sec 189543

[ 5] 1.00-2.00 sec 247 MBytes 2.08 Gbits/sec 179168

[ 5] 2.00-3.00 sec 257 MBytes 2.16 Gbits/sec 186135

[ 5] 3.00-4.00 sec 256 MBytes 2.15 Gbits/sec 185611

[ 5] 4.00-5.00 sec 241 MBytes 2.02 Gbits/sec 174501

[ 5] 5.00-6.00 sec 254 MBytes 2.13 Gbits/sec 183844

[ 5] 6.00-7.00 sec 261 MBytes 2.19 Gbits/sec 189325

[ 5] 7.00-8.00 sec 269 MBytes 2.26 Gbits/sec 194945

[ 5] 8.00-9.00 sec 249 MBytes 2.09 Gbits/sec 180506

[ 5] 9.00-10.00 sec 262 MBytes 2.20 Gbits/sec 189908

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.00 sec 2.50 GBytes 2.15 Gbits/sec 0.000 ms 0/1853486 (0%) sender

[ 5] 0.00-10.00 sec 2.47 GBytes 2.12 Gbits/sec 0.005 ms 24360/1853486 (1.3%) receiver

iperf Done.

kubectl logs -l app=iperf3-server -f

[ 5] 4.00-5.00 sec 235 MBytes 1.97 Gbits/sec 0.011 ms 4663/174506 (2.7%)

[ 5] 5.00-6.00 sec 250 MBytes 2.10 Gbits/sec 0.007 ms 2470/183848 (1.3%)

[ 5] 6.00-7.00 sec 257 MBytes 2.16 Gbits/sec 0.006 ms 2945/189317 (1.6%)

[ 5] 7.00-8.00 sec 267 MBytes 2.24 Gbits/sec 0.006 ms 1244/194942 (0.64%)

[ 5] 8.00-9.00 sec 246 MBytes 2.06 Gbits/sec 0.007 ms 2430/180508 (1.3%)

[ 5] 9.00-10.00 sec 258 MBytes 2.17 Gbits/sec 0.006 ms 2908/189907 (1.5%)

[ 5] 10.00-10.00 sec 25.5 KBytes 1.25 Gbits/sec 0.005 ms 0/18 (0%)

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-10.00 sec 2.47 GBytes 2.12 Gbits/sec 0.005 ms 24360/1853486 (1.3%) receiver

TCP, bidirectional mode (--bidir)

kubectl exec -it deploy/iperf3-client -- iperf3 -c iperf3-server -t 5 --bidir

[ 5] local 10.10.1.4 port 40332 connected to 10.200.1.162 port 5201

[ 7] local 10.10.1.4 port 40342 connected to 10.200.1.162 port 5201

[ ID][Role] Interval Transfer Bitrate Retr Cwnd

[ 5][TX-C] 0.00-1.00 sec 1.12 GBytes 9.66 Gbits/sec 828 710 KBytes

[ 7][RX-C] 0.00-1.00 sec 4.86 GBytes 41.7 Gbits/sec

[ 5][TX-C] 1.00-2.00 sec 682 MBytes 5.72 Gbits/sec 0 758 KBytes

[ 7][RX-C] 1.00-2.00 sec 5.75 GBytes 49.3 Gbits/sec

[ 5][TX-C] 2.00-3.00 sec 650 MBytes 5.45 Gbits/sec 489 512 KBytes

[ 7][RX-C] 2.00-3.00 sec 5.51 GBytes 47.3 Gbits/sec

[ 5][TX-C] 3.00-4.00 sec 655 MBytes 5.50 Gbits/sec 0 614 KBytes

[ 7][RX-C] 3.00-4.00 sec 6.28 GBytes 54.0 Gbits/sec

[ 5][TX-C] 4.00-5.00 sec 685 MBytes 5.74 Gbits/sec 170 663 KBytes

[ 7][RX-C] 4.00-5.00 sec 6.04 GBytes 51.9 Gbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID][Role] Interval Transfer Bitrate Retr

[ 5][TX-C] 0.00-5.00 sec 3.73 GBytes 6.41 Gbits/sec 1487 sender

[ 5][TX-C] 0.00-5.00 sec 3.73 GBytes 6.40 Gbits/sec receiver

[ 7][RX-C] 0.00-5.00 sec 28.4 GBytes 48.9 Gbits/sec 231 sender

[ 7][RX-C] 0.00-5.00 sec 28.4 GBytes 48.9 Gbits/sec receiver

iperf Done.

kubectl logs -l app=iperf3-server -f

[ 5][RX-S] 2.00-3.00 sec 650 MBytes 5.45 Gbits/sec

[ 8][TX-S] 2.00-3.00 sec 5.51 GBytes 47.3 Gbits/sec 0 4.78 MBytes

[ 5][RX-S] 3.00-4.00 sec 655 MBytes 5.49 Gbits/sec

[ 8][TX-S] 3.00-4.00 sec 6.28 GBytes 54.0 Gbits/sec 46 4.78 MBytes

[ 5][RX-S] 4.00-5.00 sec 684 MBytes 5.74 Gbits/sec

[ 8][TX-S] 4.00-5.00 sec 6.04 GBytes 51.9 Gbits/sec 181 4.78 MBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID][Role] Interval Transfer Bitrate Retr

[ 5][RX-S] 0.00-5.00 sec 3.73 GBytes 6.40 Gbits/sec receiver

[ 8][TX-S] 0.00-5.00 sec 28.4 GBytes 48.9 Gbits/sec 231 sender

TCP with multiple parallel streams, -P (number of parallel client streams to run); this run uses 2 streams (-P 2)

kubectl exec -it deploy/iperf3-client -- iperf3 -c iperf3-server -t 10 -P 2

Connecting to host iperf3-server, port 5201

[ 5] local 10.10.1.4 port 39080 connected to 10.200.1.162 port 5201

[ 7] local 10.10.1.4 port 39096 connected to 10.200.1.162 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 2.96 GBytes 25.4 Gbits/sec 82 643 KBytes

[ 7] 0.00-1.00 sec 2.95 GBytes 25.3 Gbits/sec 330 583 KBytes

[SUM] 0.00-1.00 sec 5.90 GBytes 50.7 Gbits/sec 412

- - - - - - - - - - - - - - - - - - - - - - - - -

[ 5] 1.00-2.00 sec 2.47 GBytes 21.2 Gbits/sec 385 669 KBytes

[ 7] 1.00-2.00 sec 2.45 GBytes 21.1 Gbits/sec 561 619 KBytes

[SUM] 1.00-2.00 sec 4.92 GBytes 42.3 Gbits/sec 946

- - - - - - - - - - - - - - - - - - - - - - - - -

[ 5] 2.00-3.00 sec 2.30 GBytes 19.8 Gbits/sec 579 679 KBytes

[ 7] 2.00-3.00 sec 2.29 GBytes 19.7 Gbits/sec 352 672 KBytes

[SUM] 2.00-3.00 sec 4.59 GBytes 39.5 Gbits/sec 931

- - - - - - - - - - - - - - - - - - - - - - - - -

[ 5] 3.00-4.00 sec 2.36 GBytes 20.3 Gbits/sec 0 764 KBytes

[ 7] 3.00-4.00 sec 2.34 GBytes 20.1 Gbits/sec 1 699 KBytes

[SUM] 3.00-4.00 sec 4.70 GBytes 40.4 Gbits/sec 1

- - - - - - - - - - - - - - - - - - - - - - - - -

[ 5] 4.00-5.00 sec 2.62 GBytes 22.5 Gbits/sec 383 860 KBytes

[ 7] 4.00-5.00 sec 2.59 GBytes 22.3 Gbits/sec 0 700 KBytes

[SUM] 4.00-5.00 sec 5.21 GBytes 44.8 Gbits/sec 383

- - - - - - - - - - - - - - - - - - - - - - - - -

[ 5] 5.00-6.00 sec 2.32 GBytes 20.0 Gbits/sec 3 878 KBytes

[ 7] 5.00-6.00 sec 2.31 GBytes 19.9 Gbits/sec 47 701 KBytes

[SUM] 5.00-6.00 sec 4.64 GBytes 39.8 Gbits/sec 50

- - - - - - - - - - - - - - - - - - - - - - - - -

[ 5] 6.00-7.00 sec 2.56 GBytes 22.0 Gbits/sec 0 938 KBytes

[ 7] 6.00-7.00 sec 2.54 GBytes 21.8 Gbits/sec 0 703 KBytes

[SUM] 6.00-7.00 sec 5.10 GBytes 43.8 Gbits/sec 0

- - - - - - - - - - - - - - - - - - - - - - - - -

[ 5] 7.00-8.00 sec 2.47 GBytes 21.2 Gbits/sec 2 967 KBytes

[ 7] 7.00-8.00 sec 2.46 GBytes 21.1 Gbits/sec 2 708 KBytes

[SUM] 7.00-8.00 sec 4.92 GBytes 42.3 Gbits/sec 4

- - - - - - - - - - - - - - - - - - - - - - - - -

[ 5] 8.00-9.00 sec 2.51 GBytes 21.5 Gbits/sec 0 967 KBytes

[ 7] 8.00-9.00 sec 2.50 GBytes 21.5 Gbits/sec 1 717 KBytes

[SUM] 8.00-9.00 sec 5.00 GBytes 43.0 Gbits/sec 1

- - - - - - - - - - - - - - - - - - - - - - - - -

[ 5] 9.00-10.00 sec 2.58 GBytes 22.2 Gbits/sec 1 1008 KBytes

[ 7] 9.00-10.00 sec 2.56 GBytes 22.0 Gbits/sec 2 755 KBytes

[SUM] 9.00-10.00 sec 5.14 GBytes 44.2 Gbits/sec 3

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 25.1 GBytes 21.6 Gbits/sec 1435 sender

[ 5] 0.00-10.00 sec 25.1 GBytes 21.6 Gbits/sec receiver

[ 7] 0.00-10.00 sec 25.0 GBytes 21.5 Gbits/sec 1296 sender

[ 7] 0.00-10.00 sec 25.0 GBytes 21.5 Gbits/sec receiver

[SUM] 0.00-10.00 sec 50.1 GBytes 43.1 Gbits/sec 2731 sender

[SUM] 0.00-10.00 sec 50.1 GBytes 43.1 Gbits/sec receiver

iperf Done.

kubectl logs -l app=iperf3-server -f

[SUM] 9.00-10.00 sec 5.14 GBytes 44.2 Gbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ 5] 10.00-10.00 sec 1.13 MBytes 16.3 Gbits/sec

[ 8] 10.00-10.00 sec 1.25 MBytes 18.1 Gbits/sec

[SUM] 10.00-10.00 sec 2.38 MBytes 34.3 Gbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate

[ 5] 0.00-10.00 sec 25.1 GBytes 21.6 Gbits/sec receiver

[ 8] 0.00-10.00 sec 25.0 GBytes 21.5 Gbits/sec receiver

[SUM] 0.00-10.00 sec 50.1 GBytes 43.1 Gbits/sec receiver
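The iperf3 numbers above are easier to cross-check once the units are pinned down, and the output itself pins them: 33.8 GBytes in 5 s only works out to 58.1 Gbits/sec if a GByte is 2^30 bytes while a Gbit is 10^9 bits. The small Python helper below encodes that inferred convention; `gbits_per_sec`, `loss_percent`, `GIB`, and `MIB` are hypothetical names, not part of iperf3.

```python
GIB = 2 ** 30  # iperf3 "GBytes": binary gigabytes (inferred from the output)
MIB = 2 ** 20  # iperf3 "MBytes": binary megabytes


def gbits_per_sec(transfer_bytes: float, seconds: float) -> float:
    """Bitrate in decimal Gbit/s (10**9 bit/s), as in iperf3's 'Gbits/sec' column."""
    return transfer_bytes * 8 / seconds / 1e9


def loss_percent(lost: int, total: int) -> float:
    """UDP loss as shown in iperf3's 'Lost/Total Datagrams' column."""
    return 100.0 * lost / total if total else 0.0


if __name__ == "__main__":
    print(round(gbits_per_sec(33.8 * GIB, 5), 1))   # TCP, 5 s: 58.1
    print(round(gbits_per_sec(2.47 * GIB, 10), 2))  # UDP receiver, 10 s: 2.12
    print(round(loss_percent(24360, 1853486), 1))   # UDP loss: 1.3
```

Recomputing from the rounded Transfer column can be off by a digit: the summed -P 2 transfer of 50.1 GBytes over 10 s gives about 43.0 Gbit/s against the 43.1 that iperf3 prints from its unrounded byte count.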